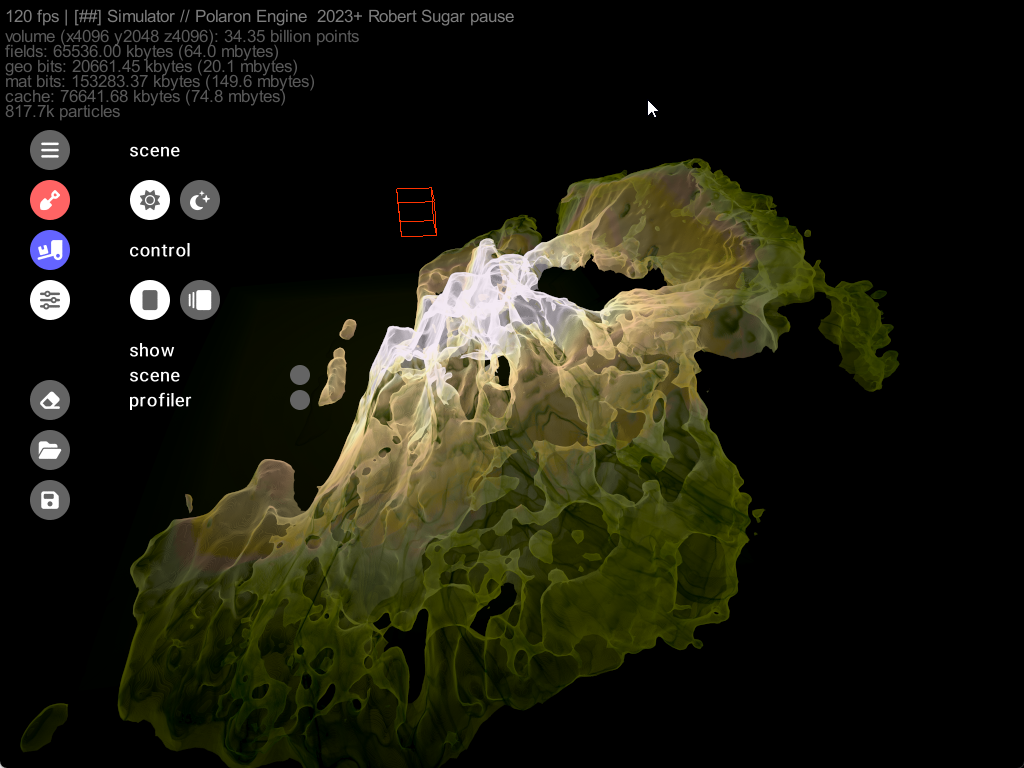

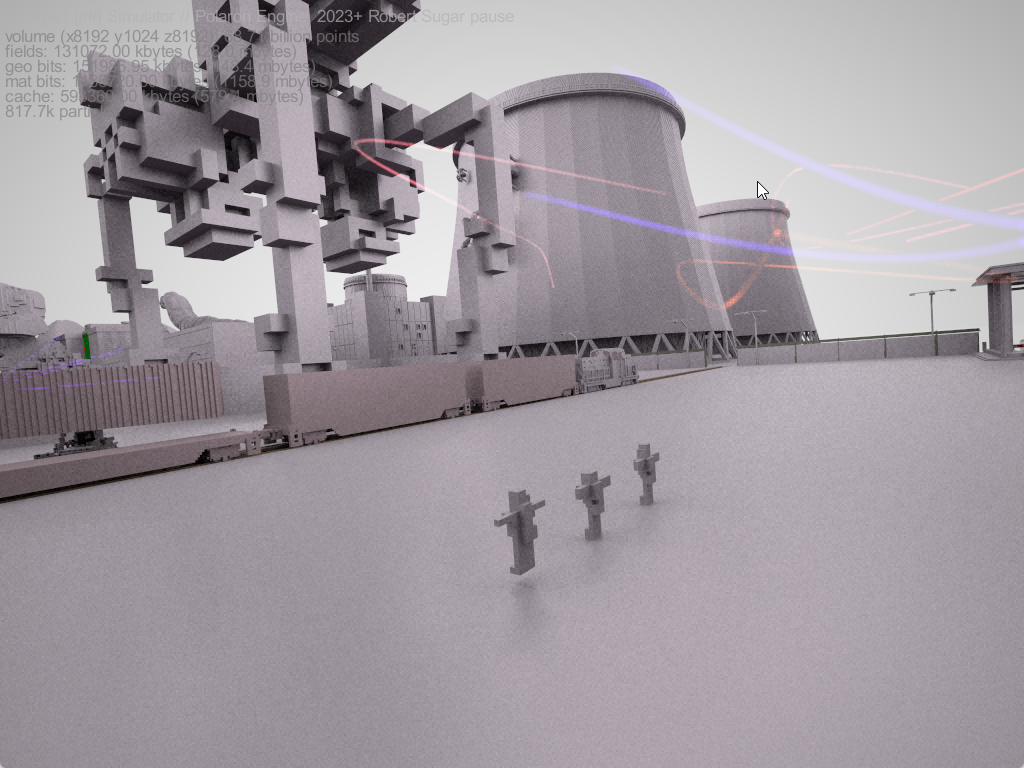

A voxel is a 3D pixel, like the ones you’re viewing now. Voxels can be packed with RGB values to give colour, but also with other data such as strength, occupancy, material and attenuation. It is this capability that allows us to produce a real-time raster that acts as both terrain and simulation layer, traversed by agents, cellular automata and more.
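To make the idea of packing concrete, here is a minimal sketch of how colour plus a couple of extra attributes could be squeezed into a single 32-bit word per voxel. The field layout (5/6/5-bit RGB, an 8-bit material ID and an 8-bit strength value) is a hypothetical choice for illustration, not Polaron’s actual storage format.

```python
from dataclasses import dataclass

# Hypothetical 32-bit voxel layout (illustrative only, not Polaron's format):
#   bits 0-4   red         (5 bits)
#   bits 5-10  green       (6 bits)
#   bits 11-15 blue        (5 bits)
#   bits 16-23 material id (8 bits)
#   bits 24-31 strength    (8 bits)

@dataclass(frozen=True)
class Voxel:
    r: int         # 0..31
    g: int         # 0..63
    b: int         # 0..31
    material: int  # 0..255
    strength: int  # 0..255

# (field name, bit offset, bit width) for each attribute in the packed word
FIELDS = (("r", 0, 5), ("g", 5, 6), ("b", 11, 5), ("material", 16, 8), ("strength", 24, 8))

def pack(v: Voxel) -> int:
    """Pack all attributes into one unsigned 32-bit integer."""
    word = 0
    for name, offset, width in FIELDS:
        value = getattr(v, name)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << offset
    return word

def unpack(word: int) -> Voxel:
    """Recover the attributes by shifting and masking each field."""
    values = {name: (word >> offset) & ((1 << width) - 1) for name, offset, width in FIELDS}
    return Voxel(**values)

v = Voxel(r=31, g=10, b=4, material=7, strength=200)
w = pack(v)
assert unpack(w) == v
print(hex(w))  # 0xc807215f
```

A fixed-width word like this keeps every voxel the same size, which makes dense arrays and GPU buffers simple to index; the trade-off is that each attribute’s range is capped by its bit width.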

We built Polaron to serve our own needs after we struggled to use available Geospatial and Games Industry tools to simulate and present spatial scenarios at terabyte and petabyte scale:

- Game engine challenges: Games Industry tools perform well in small scenarios (that is what they were designed for). They are fast and provide fascinating visuals, but they break down at the scale we operate at, or when we flood them with real-time data.
- Geospatial tool challenges: Geospatial tools do cope with the scale, but fall behind on features, speed, overall accessibility and look.

Polaron builds in the middle, where the gaps are. Voxelised environments let us compress spatial data efficiently while retaining access, traversability and real-time performance.
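One common, generic way to compress voxel data while keeping random access is run-length encoding along columns: long runs of air or rock collapse to a single entry, and a lookup becomes a binary search over the run start indices. The sketch below illustrates that technique only; it is not a description of how Polaron compresses its data, and the material codes (0 = air, 1 = soil, 2 = rock) are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

def rle_encode(column: Sequence[int]) -> List[Tuple[int, int]]:
    """Encode a column of voxel values as (start_index, value) runs."""
    runs: List[Tuple[int, int]] = []
    for i, value in enumerate(column):
        if not runs or runs[-1][1] != value:
            runs.append((i, value))
    return runs

class RLEColumn:
    """Compressed column that still supports O(log runs) random access."""

    def __init__(self, column: Sequence[int]):
        self.length = len(column)
        runs = rle_encode(column)
        self.starts = [start for start, _ in runs]
        self.values = [value for _, value in runs]

    def __getitem__(self, z: int) -> int:
        if not 0 <= z < self.length:
            raise IndexError(z)
        # The run containing z is the last one whose start index is <= z
        return self.values[bisect_right(self.starts, z) - 1]

    def decode(self) -> List[int]:
        """Expand back to the full, uncompressed column."""
        out: List[int] = []
        ends = self.starts[1:] + [self.length]
        for start, end, value in zip(self.starts, ends, self.values):
            out.extend([value] * (end - start))
        return out

# Hypothetical codes: 0 = air, 1 = soil, 2 = rock
column = [2] * 40 + [1] * 8 + [0] * 80
col = RLEColumn(column)
print(len(col.starts))  # 3 runs instead of 128 stored values
print(col[45])          # 1 (soil)
```

Terrain columns tend to compress well this way because they are mostly a few long runs; heavily detailed or noisy columns gain less.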

It is this mix of capabilities, architected from the ground up, that enables a robust, stable, real-time sandbox in which to perform GIS analysis, wargame scenarios and play amongst complex, interactive and destructible terrain, at very large scale.

Frequently Asked: Pros and Cons of Voxel-Based Simulation & Gaming

Moving beyond theory (a circle can be drawn from an infinite number of cubes), it is possible to create voxelised terrain dense enough to support near-real-life models. Sadly, however, life and compute come with a budget.

| Pros of Voxels | Cons of Voxels |
| --- | --- |
| Voxels can represent fine details and smooth shapes very well, especially when compared to triangle meshes. Additionally, you can pack individual voxels with attributes such as hardness, transparency, resistance or attenuation. | Voxels can require a lot of memory to store, especially for large grids or grids with high resolution. This is an inherent limit to most 3D processes; in effect, it is the budget. We have optimised our Voxel engine to squeeze over 60 billion voxels into a scene whilst still being able to run real-time processes on a single instance. |
| Voxels can be easily transformed, scaled and rotated without loss of detail. This means we can render different resolutions and use adaptive scaling to conserve budget for finer detail, or pass data between scales by “embedding” a volume within a larger voxel scale that can be unpacked on demand. | Voxels can be computationally expensive to simulate, especially when compared to other methods such as particle-based simulation. We believe we’ve created the most efficient methods for voxel-based simulation in its class. This point is more of an apples vs pears debate, since particle-based simulation can’t store the volumes of data we want to use and is intended for a different type of simulation. We can also support multiple types of simulation inside the Voxel engine, such as splines, particulates and smoke, rendered via the Voxel engine or as an effect encapsulated as a voxel. |
| Voxels can be easily edited, such as by painting or sculpting, using simple algorithms. We have two editors already, with a third underway. The first is essentially a scripting language that lets you determine what data to pack where (e.g. road class, building type, material). The second is a sculpting tool for painting, editing and demolishing scenes. The third isolates objects so they can be fine-tuned and have data painted in, e.g. adding windows, changing material values, adding heat sources, repulsors or attractors, or editing metadata (e.g. fear or another emitter). | Voxels can produce blocky artifacts, especially when viewed from far away or when the grid resolution is low. This is true, and since voxels are often used in rendering pipelines to work out the K-nearest neighbour problem, it is a factor in the end presentation. However, as demonstrated by the success of Minecraft, Teardown, BattleBit Remastered, etc., the aesthetic of a lower-fidelity presentation is trumped by the capabilities it affords. The insight and complexity it offers allow far more interesting simulations and gameplay than a wonderfully realistic but ultimately hollow set of objects. Many of the most successful games aren’t photo-realistic and rest not on their graphics but on their gameplay and experience. These artifacts can also be addressed procedurally to enhance the aesthetic, down to the LOD budget for each scene. |
| Voxels can be efficiently processed using parallel algorithms and hardware such as graphics processing units (GPUs). This allows us to choose whether to run processes on the CPU or the parallel GPU. Typical COTS GPUs split up tasks (often in 4), allowing parallel processing. This lets us scale intensive tasks such as demolition and physics and push them onto the GPU to support real-time destruction and complex interactions. | Voxels can be difficult to work with when it comes to maintaining topological correctness and surface orientation. This is an interesting challenge, since a voxelised mesh is a discretised grid into which data has to fit. However, consider a satellite image (e.g. DTM / DSM): it has undergone multiple transformations, often including a K-nearest neighbour step, from the lens and sensor of the orbiting satellite through to the GIS tool used to composite and assemble it into a usable image. We therefore believe these issues are highly subjective and inherent to all GIS / EOS-derived data. Since the spatial resolution can also be edited, it is also possible to upscale, e.g. taking 3 m resolution IR EOS data and unpacking it into a 1 m² semantic map. |
| Voxels are an accurate 3D building block because they mimic particles. Voxels can represent space as well as interactions with the particles or voxels around them, so stickiness, force and the passage of interactions are far easier to simulate. This is partly why voxels are used in geophysical, biological and fluid models. They are arguably the fastest way to model and visualise data from an input source such as lidar or a scan. | Modern hardware isn’t tuned to render voxels. This isn’t strictly true: whilst polygons are the focus, GPUs are now used as much for AI / ML as for rendering. We don’t use much of the NVIDIA software stack, focusing instead on maximising utilisation of the actual hardware (above bare metal, but in C++), which lets us tune the GPU and CPU interactions to achieve previously unachievable detail. We are at the comparable limits of point clouds (circa 60+ billion points), which are often converted into a mesh to reduce the point count, with polygons used to fill in and texture the gaps. |
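A back-of-the-envelope calculation shows why memory is the budget at this scale. Only the 60 billion voxel count comes from the table above; the bytes-per-voxel figures are illustrative assumptions, not Polaron’s storage format.

```python
VOXELS = 60_000_000_000  # voxel count quoted in the table above

def dense_size_gib(voxel_count: int, bytes_per_voxel: int) -> float:
    """Raw size of a dense, uncompressed voxel array in GiB (2**30 bytes)."""
    return voxel_count * bytes_per_voxel / 2**30

# Hypothetical per-voxel sizes, from a bare material byte to a richer record
for bpv in (1, 4, 8):
    print(f"{bpv} byte(s)/voxel: {dense_size_gib(VOXELS, bpv):,.1f} GiB")
```

At 4 bytes per voxel a dense array already needs roughly 224 GiB, which is why sparse, compressed and chunked layouts matter far more than raw voxel count.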

Some features of Polaron’s Voxel engine

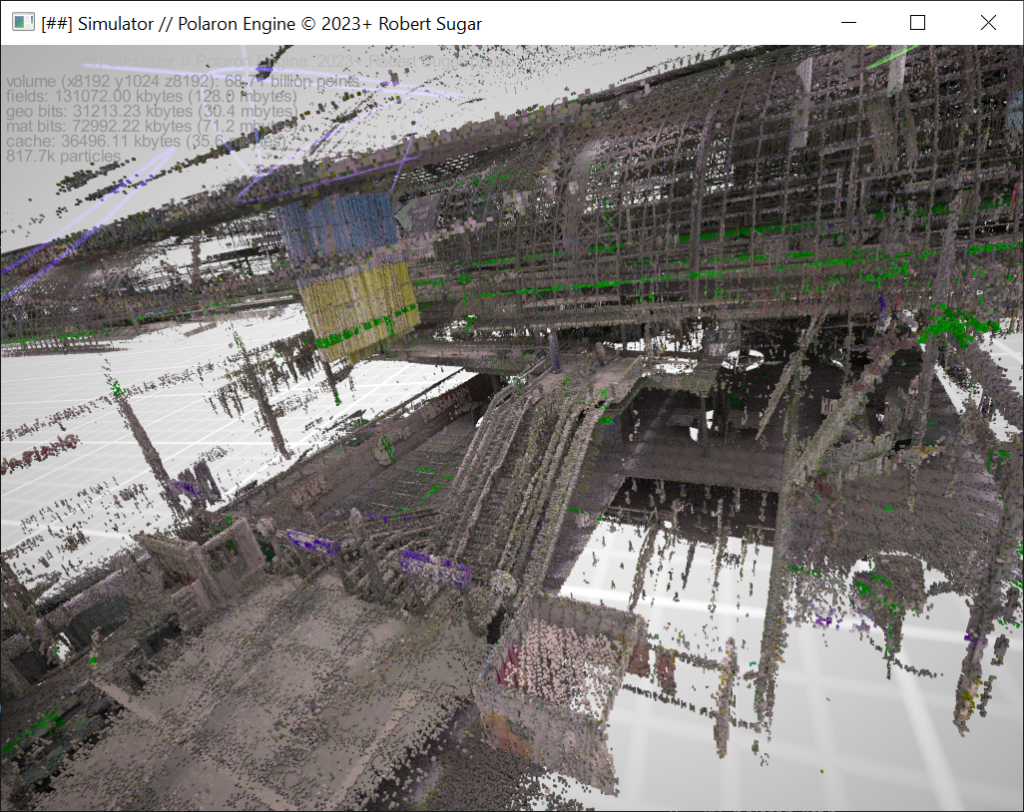

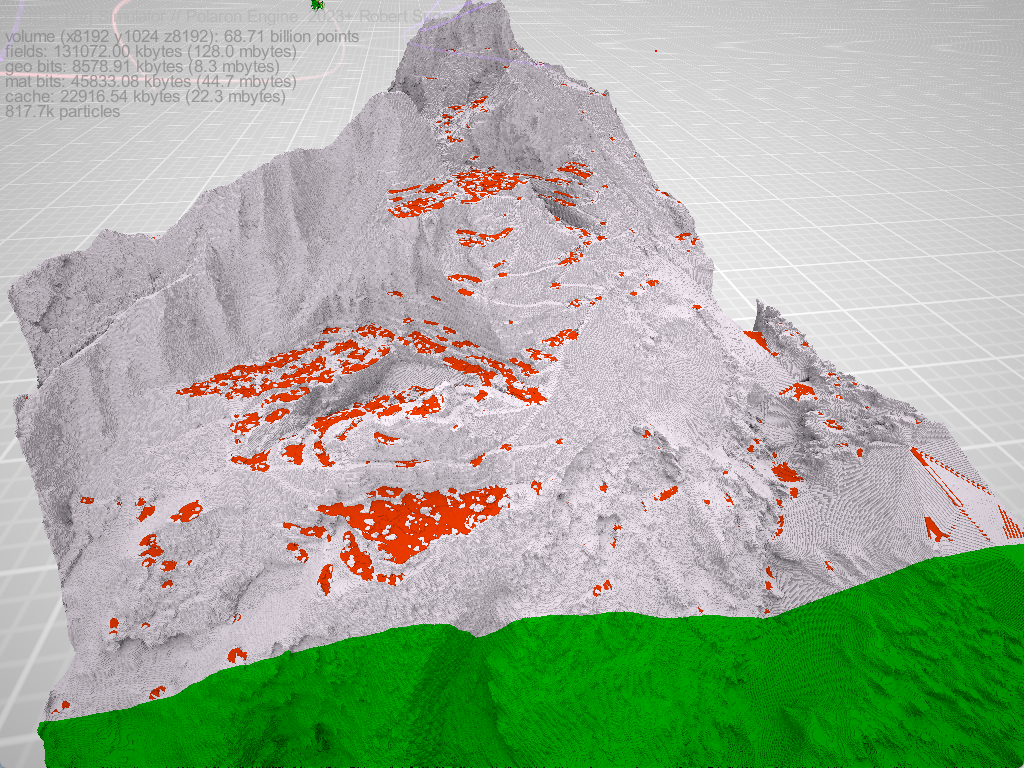

Turn Lidar into 3D data – including procedural terrain models

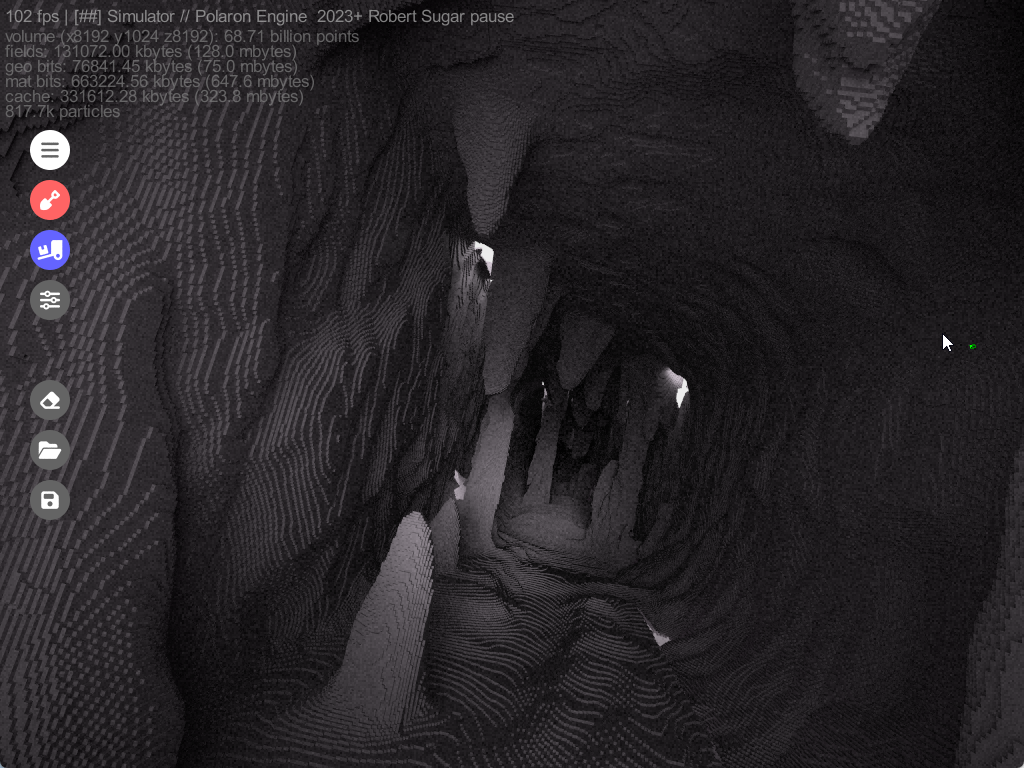

We ingest Lidar and convert it into a voxel simulation. Building that simulation from Lidar and EOS data with high spatial resolution allows us to model synthetic terrain around a wide range of scans, from handheld SLAM systems to UAVs and EOS capabilities.
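The first step of turning a point cloud into voxels can be sketched as quantising each point to a grid cell and aggregating the points that land in the same cell. The 1 m voxel size, the origin and the “count plus mean intensity” aggregate are assumptions for illustration; a real Lidar ingest would also handle classification, multiple returns and coordinate reference systems.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float, float]  # x, y, z in metres, plus intensity
Key = Tuple[int, int, int]                  # integer voxel indices

def voxelise(points: Iterable[Point], voxel_size: float = 1.0,
             origin: Tuple[float, float, float] = (0.0, 0.0, 0.0)) -> Dict[Key, Tuple[int, float]]:
    """Bin points into a sparse voxel grid: key -> (point count, mean intensity)."""
    counts: Dict[Key, int] = defaultdict(int)
    sums: Dict[Key, float] = defaultdict(float)
    ox, oy, oz = origin
    for x, y, z, intensity in points:
        # math.floor (not int) so negative coordinates map to the correct cell
        key = (math.floor((x - ox) / voxel_size),
               math.floor((y - oy) / voxel_size),
               math.floor((z - oz) / voxel_size))
        counts[key] += 1
        sums[key] += intensity
    return {k: (counts[k], sums[k] / counts[k]) for k in counts}

pts = [(0.2, 0.3, 0.1, 10.0), (0.8, 0.9, 0.5, 30.0), (1.5, 0.1, 0.2, 5.0), (-0.5, 0.0, 0.0, 1.0)]
grid = voxelise(pts, voxel_size=1.0)
print(grid[(0, 0, 0)])  # (2, 20.0): two points averaged into one voxel
```

Only occupied cells are stored, so empty air between scan points costs nothing until it is needed.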

Fusion of data to make the systems in the terrain realistic

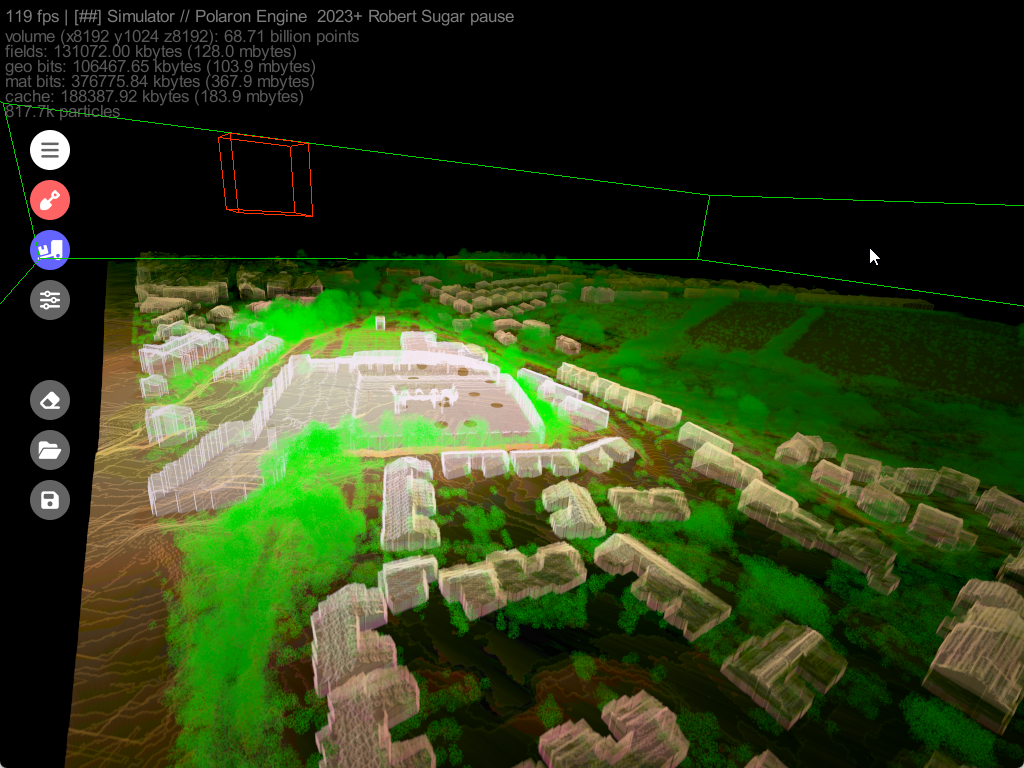

Combine EOS & GeoSemantic data to create a living sandbox. It’s not just the terrain but the systems, buildings (and materials) and people that occupy it that allow insight to be derived and serious simulation to be powered.

Above and below the ground modelling

Don’t just model the surface: examine sub-surface systems and their relationship to surface systems and infrastructure. Propagate through a volume and use the scan data to assess the terrain, its dependencies and potential sub-t (subterranean) interfaces.

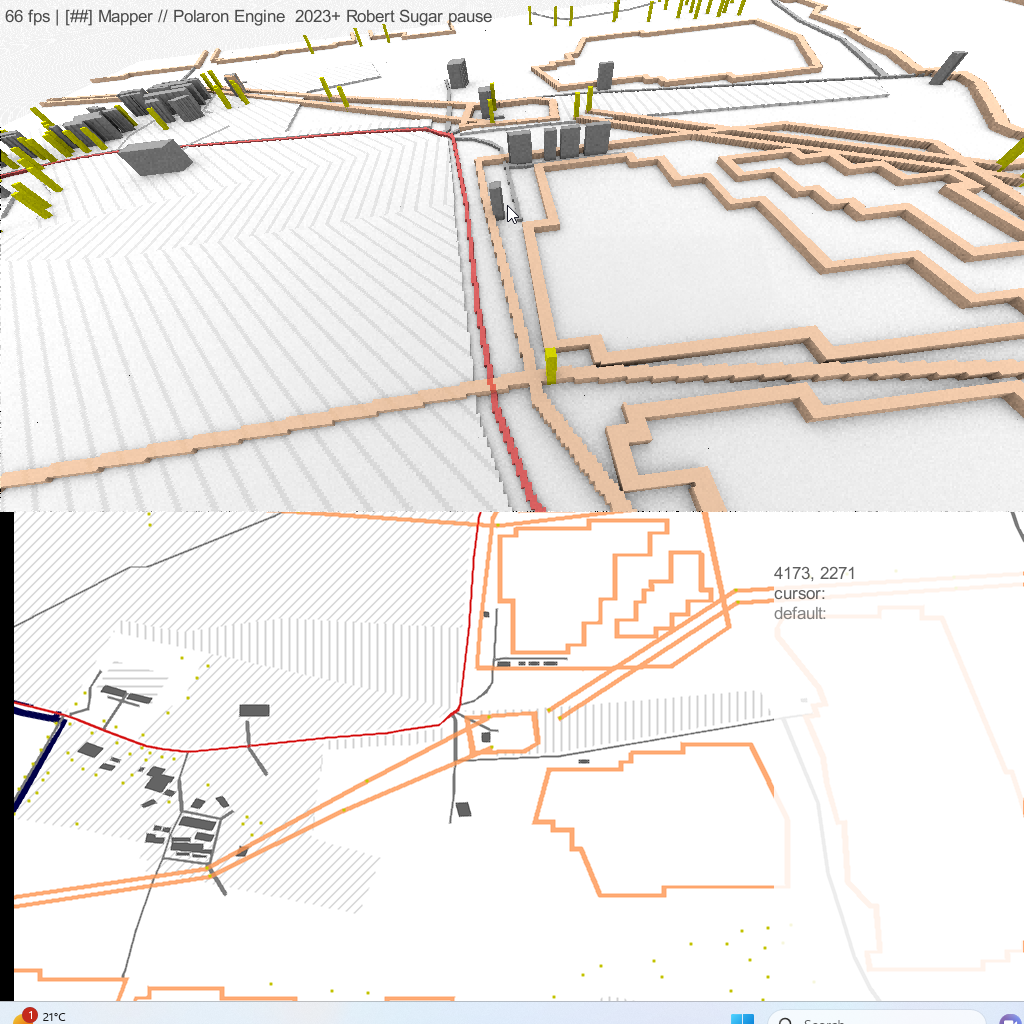

One dataset – many LODs

Choosing the LOD and the data to pack into a scene is simple. The source data is preserved, and multiple LODs can even run in parallel or cooperatively, so you can see how effects at the tactical level impact the operational level.
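Deriving coarser LODs from one dataset can be sketched as a mip chain: each 2×2×2 block of voxels collapses into one parent voxel, while the source grid stays untouched as LOD0. Here the parent takes the most common material among its occupied children (a majority vote), which is one of several possible reduction rules and an assumption, not Polaron’s documented choice.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Coord = Tuple[int, int, int]
SparseGrid = Dict[Coord, int]  # coord -> material id; absent coords are empty (air)

def downsample(grid: SparseGrid) -> SparseGrid:
    """Halve the resolution: each 2x2x2 block of children becomes one parent voxel."""
    blocks: Dict[Coord, Counter] = defaultdict(Counter)
    for (x, y, z), material in grid.items():
        # Floor division keeps negative coordinates in the correct parent block
        blocks[(x // 2, y // 2, z // 2)][material] += 1
    # Majority vote over occupied children only, so a parent is filled if any child
    # is filled (thin features survive); ties go to the lowest material id.
    return {parent: min(counts.items(), key=lambda kv: (-kv[1], kv[0]))[0]
            for parent, counts in blocks.items()}

def build_lods(grid: SparseGrid, levels: int) -> List[SparseGrid]:
    """Return [LOD0, LOD1, ...]; LOD0 is the unmodified source grid."""
    lods = [grid]
    for _ in range(levels):
        lods.append(downsample(lods[-1]))
    return lods

fine = {(0, 0, 0): 1, (1, 0, 0): 1, (0, 1, 0): 2, (2, 0, 0): 3}
lods = build_lods(fine, 2)
print(lods[1])  # {(0, 0, 0): 1, (1, 0, 0): 3}
print(lods[2])  # {(0, 0, 0): 1}
```

Because every level is derived rather than edited, a tactical simulation can run on LOD0 while an operational view reads a coarser level of the same data.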

Fields, fluids and waves

Owing to the voxelised nature of the world, waves, fluids and fields can propagate through it. These can dictate and simulate the behaviour of agents and actors, rays and waves, and even penetration, fire and flooding, giving plenty of opportunities to exploit large-scale, complex interactions.
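As a minimal illustration of a field propagating through a voxel world, the sketch below spreads a scalar value (think heat, noise or “fear”) outward from sources, losing a fixed amount per step, with solid cells blocking the wavefront. The 2D slice, 4-connectivity, linear falloff and “strongest value wins” rule are all simplifying assumptions; a real solver might diffuse, ray-trace or simulate fluid flow instead.

```python
import heapq
from typing import Dict, List, Tuple

Cell = Tuple[int, int]

def propagate_field(solid: List[List[bool]], sources: Dict[Cell, float],
                    falloff: float = 1.0) -> List[List[float]]:
    """Spread a scalar field from sources; solid cells block propagation.

    Each step to a 4-connected neighbour loses `falloff`; values stop at 0.
    Where sources overlap, a cell keeps the strongest value that reaches it.
    """
    h, w = len(solid), len(solid[0])
    field = [[0.0] * w for _ in range(h)]
    heap: List[Tuple[float, int, int]] = []  # max-heap via negated values
    for (r, c), strength in sources.items():
        if not solid[r][c] and strength > field[r][c]:
            field[r][c] = strength
            heapq.heappush(heap, (-strength, r, c))
    while heap:
        neg_value, r, c = heapq.heappop(heap)
        value = -neg_value
        if value < field[r][c]:
            continue  # stale entry: a stronger value already reached this cell
        nxt = value - falloff
        if nxt <= 0:
            continue
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not solid[nr][nc] and nxt > field[nr][nc]:
                field[nr][nc] = nxt
                heapq.heappush(heap, (-nxt, nr, nc))
    return field

corridor = [[False, False, True, False, False]]  # True = solid, e.g. a wall
print(propagate_field(corridor, {(0, 0): 3.0}))  # [[3.0, 2.0, 0.0, 0.0, 0.0]]
```

Processing the strongest values first (as in Dijkstra’s algorithm) means each cell settles on its final value once, even with several sources of different strengths.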

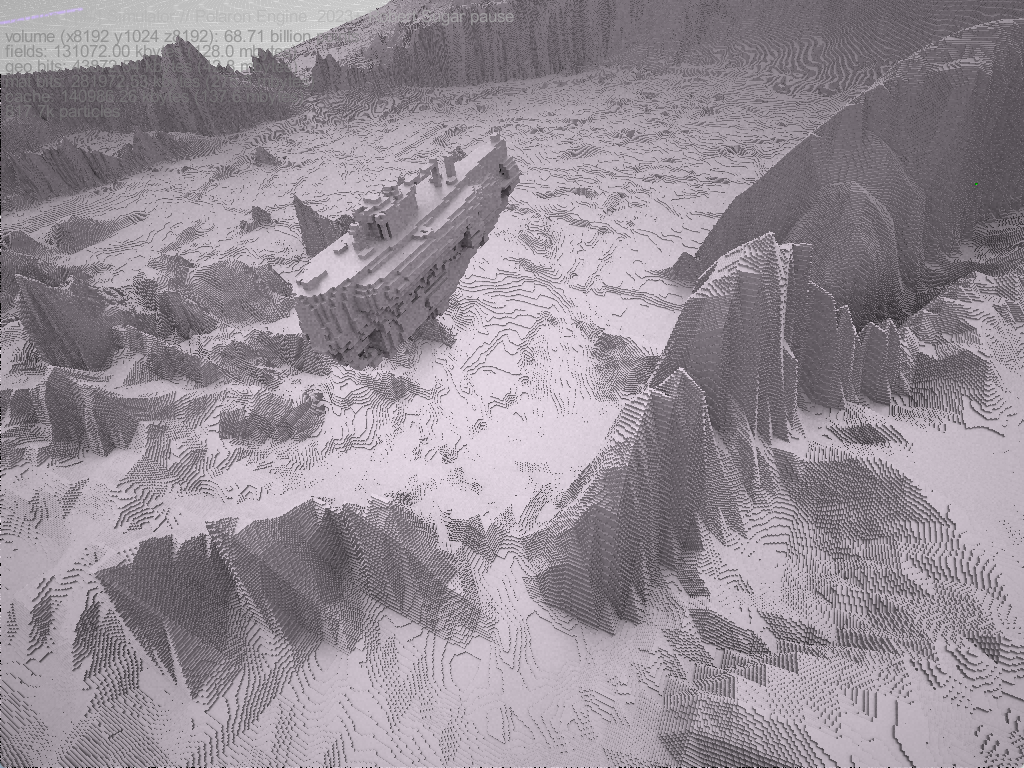

Games & Game Environments

Whether it’s fighting over a city or a continent or going room to room, we can represent the destruction and complexity of urban and other complex scenes to bring highly dynamic environments and immersive experiences to life.

Enabling data editing and traversal in real time

This means you can edit and play with not just the physical terrain but also the values of the data that affect it, the values of buildings and, soon, the people that traverse these spaces. Infrared data creates a heat map, which is analogous to the fields and push and pull factors that let us layer complexity into a scene. Node- and graph-based systems such as power and water are preserved too, and their effects propagate out over large areas.
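Node- and graph-based systems such as power can be sketched as a directed supply network: when a node fails, every node that can no longer be reached from a working source loses supply, and that loss could then be written back onto the voxels of the affected buildings. The network, node names and single-plant source below are hypothetical.

```python
from collections import deque
from typing import AbstractSet, Dict, Iterable, List, Set

def supplied_nodes(edges: Dict[str, List[str]], sources: Iterable[str],
                   failed: AbstractSet[str] = frozenset()) -> Set[str]:
    """Return every node still reachable from a working source (breadth-first search)."""
    queue = deque(s for s in sources if s not in failed)
    reached: Set[str] = set(queue)
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in failed and nxt not in reached:
                reached.add(nxt)
                queue.append(nxt)
    return reached

# Hypothetical network: one plant feeding two substations, each feeding buildings
network = {
    "plant": ["sub_north", "sub_south"],
    "sub_north": ["hospital", "station"],
    "sub_south": ["school", "station"],  # the station has a redundant feed
}
all_nodes = set(network) | {n for targets in network.values() for n in targets}
powered = supplied_nodes(network, ["plant"], failed={"sub_north"})
print(sorted(all_nodes - powered))  # ['hospital', 'sub_north']
```

Note that the station stays powered through its second feed, which is exactly the kind of dependency a flat map hides and a graph makes explicit.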

As a result, we can host incredibly complex layers of interacting data. For example, a fire makes an area hotter, a heatwave can affect services and population behaviour, and cooling or heating units produce heat blooms. The same approach applies to voxel models of the vehicles and entities that traverse the terrain. Here’s an example of a generated tank asset.

Here is the same asset painted with different colour values, including emitters that represent systems, infrared signatures and weak points. These can be programmed to allow a weapon to penetrate, a sensor to see the vehicle, or a system to be damaged or destroyed, which in turn affects the other associated entities. This becomes more important as physics is introduced.
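The penetration idea can be sketched as walking a ray through the voxel grid and subtracting each voxel’s resistance from the projectile’s remaining energy. The traversal follows the well-known Amanatides and Woo grid-stepping method; the resistance values, the simple subtraction model and the `fire` helper are hypothetical and are not Polaron’s damage model.

```python
import math
from typing import Dict, Iterator, Optional, Sequence, Tuple

VoxelKey = Tuple[int, int, int]

def traverse(origin: Sequence[float], direction: Sequence[float],
             max_dist: float) -> Iterator[VoxelKey]:
    """Yield the unit voxels a ray passes through, in order (Amanatides and Woo)."""
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0:
        raise ValueError("direction must be non-zero")
    direction = [d / length for d in direction]  # t is now measured in voxel units
    voxel = [math.floor(o) for o in origin]
    step, t_max, t_delta = [], [], []
    for o, d, v in zip(origin, direction, voxel):
        if d > 0:
            step.append(1)
            t_max.append((v + 1 - o) / d)  # distance to the first boundary on this axis
            t_delta.append(1 / d)          # distance between boundaries on this axis
        elif d < 0:
            step.append(-1)
            t_max.append((v - o) / d)
            t_delta.append(-1 / d)
        else:
            step.append(0)
            t_max.append(math.inf)
            t_delta.append(math.inf)
    t = 0.0
    while t <= max_dist:
        yield (voxel[0], voxel[1], voxel[2])
        axis = t_max.index(min(t_max))  # the nearest boundary is crossed next
        t = t_max[axis]
        voxel[axis] += step[axis]
        t_max[axis] += t_delta[axis]

def fire(resistance: Dict[VoxelKey, float], origin: Sequence[float],
         direction: Sequence[float], energy: float,
         max_dist: float) -> Tuple[Optional[VoxelKey], float]:
    """Return (voxel where the projectile stops, or None; remaining energy)."""
    for v in traverse(origin, direction, max_dist):
        energy -= resistance.get(v, 0.0)  # empty voxels cost nothing
        if energy <= 0:
            return v, 0.0
    return None, energy

# Hypothetical tank slice: two armour voxels, then an engine voxel
armour = {(3, 0, 0): 40.0, (4, 0, 0): 40.0, (6, 0, 0): 10.0}
print(fire(armour, (0.5, 0.5, 0.5), (1, 0, 0), 85.0, 10.0))  # ((6, 0, 0), 0.0)
```

Returning the voxel where the round stops gives a natural hook for the “system damaged” logic: if that voxel is painted as an engine or weak point, the associated entity can be updated.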

It also works for a range of different assets, from sci-fi spider tanks, walking units and alien units in gaming through to complex structures such as bridges or infrastructure. This allows procedurally generated appendages or motions to be painted in, be it a turret, a leg or an armature.

These are lower-fidelity models, some AI-derived, used to test a variety of capabilities. Production assets will be made by hand to ensure precision and accurate mapping of systems. Whilst AI-derived artwork is a great testbed, the thousands of variables we’d want to encode point towards a procedural pathway augmented by an AI / ML approach (please see our investor page, as this is something we’d love to engage with more).

We are also looking to build on the open .vox format to provide a level of interoperability with the large number of existing voxel assets and artists, with our own tools providing a means to augment them.
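For interoperability, a minimal reader for the MagicaVoxel .vox layout might look like the sketch below. It follows the published chunk structure (a “VOX ” header and version, then a MAIN chunk whose children include SIZE and XYZI chunks, each chunk carrying a 4-byte ID, a content size and a children size, all little-endian) but deliberately skips palettes, the scene graph and other extensions, so treat it as a starting point rather than a complete loader.

```python
import struct
from typing import List, Optional, Tuple

Model = Tuple[Optional[Tuple[int, int, int]], List[Tuple[int, int, int, int]]]

def read_vox(data: bytes) -> List[Model]:
    """Return [(size_xyz, [(x, y, z, colour_index), ...]), ...], one entry per model."""
    if data[:4] != b"VOX ":
        raise ValueError("not a .vox file")
    offset = 8  # skip the magic and the int32 version number
    chunk_id, content_size, children_size = struct.unpack_from("<4sii", data, offset)
    if chunk_id != b"MAIN":
        raise ValueError("expected MAIN chunk")
    offset += 12 + content_size
    end = offset + children_size
    models: List[Model] = []
    size = None
    while offset < end:
        chunk_id, content_size, children_size = struct.unpack_from("<4sii", data, offset)
        body = offset + 12
        if chunk_id == b"SIZE":
            size = struct.unpack_from("<iii", data, body)
        elif chunk_id == b"XYZI":
            (count,) = struct.unpack_from("<i", data, body)
            voxels = [struct.unpack_from("<4B", data, body + 4 + 4 * i) for i in range(count)]
            models.append((size, voxels))
        # Other chunks (palette, scene graph, materials, ...) are skipped here
        offset = body + content_size + children_size
    return models

def make_chunk(chunk_id: bytes, content: bytes, children: bytes = b"") -> bytes:
    """Build one chunk; used here only to create a tiny test file in memory."""
    return chunk_id + struct.pack("<ii", len(content), len(children)) + content + children

demo = b"VOX " + struct.pack("<i", 150) + make_chunk(
    b"MAIN", b"",
    make_chunk(b"SIZE", struct.pack("<iii", 2, 2, 2))
    + make_chunk(b"XYZI", struct.pack("<i", 2) + bytes([0, 0, 0, 1, 1, 1, 1, 5])))
print(read_vox(demo))  # [((2, 2, 2), [(0, 0, 0, 1), (1, 1, 1, 5)])]
```

A file on disk would be read the same way by passing the bytes of the file to `read_vox`; extra per-voxel attributes would then need their own mapping on top of the colour index.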

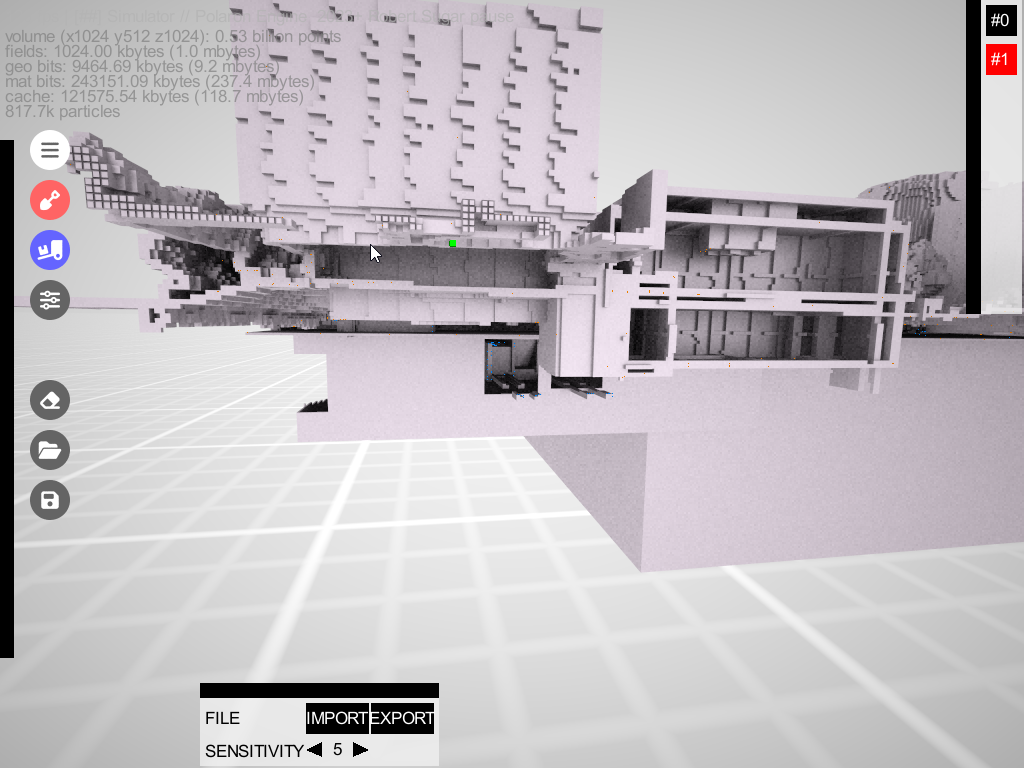

The same approach can be taken for buildings, in effect combining the human hand and procedural generation to populate systems and dependencies: heating, sewage, lighting, emergency exits, stairways, floors and clutter.

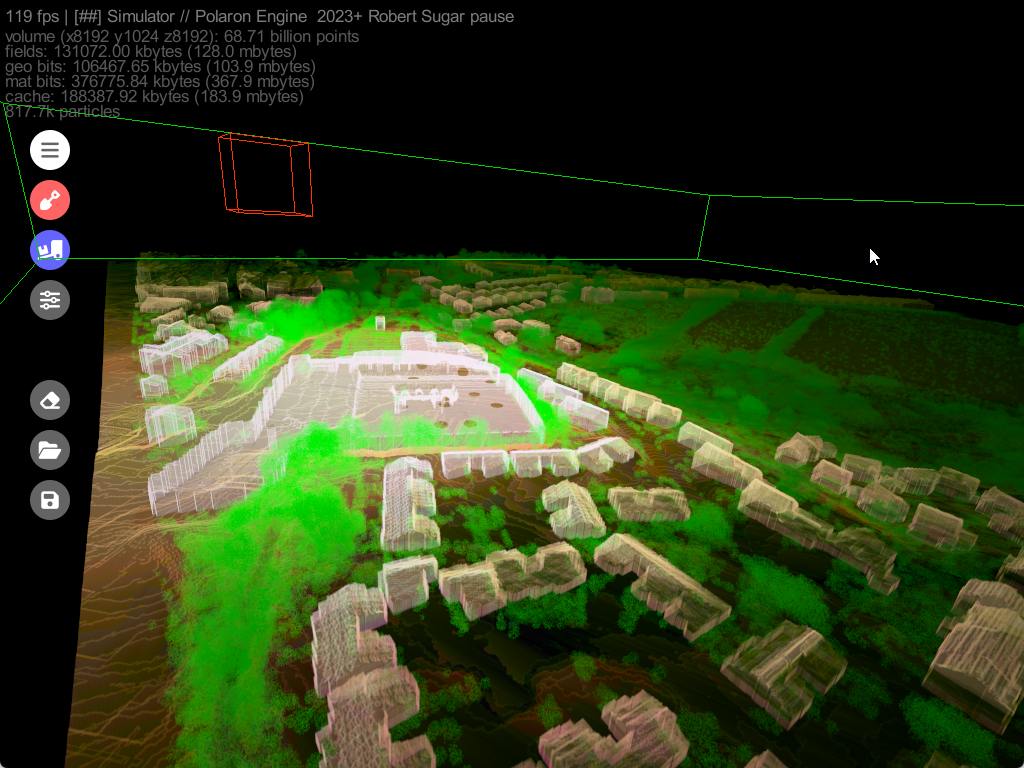

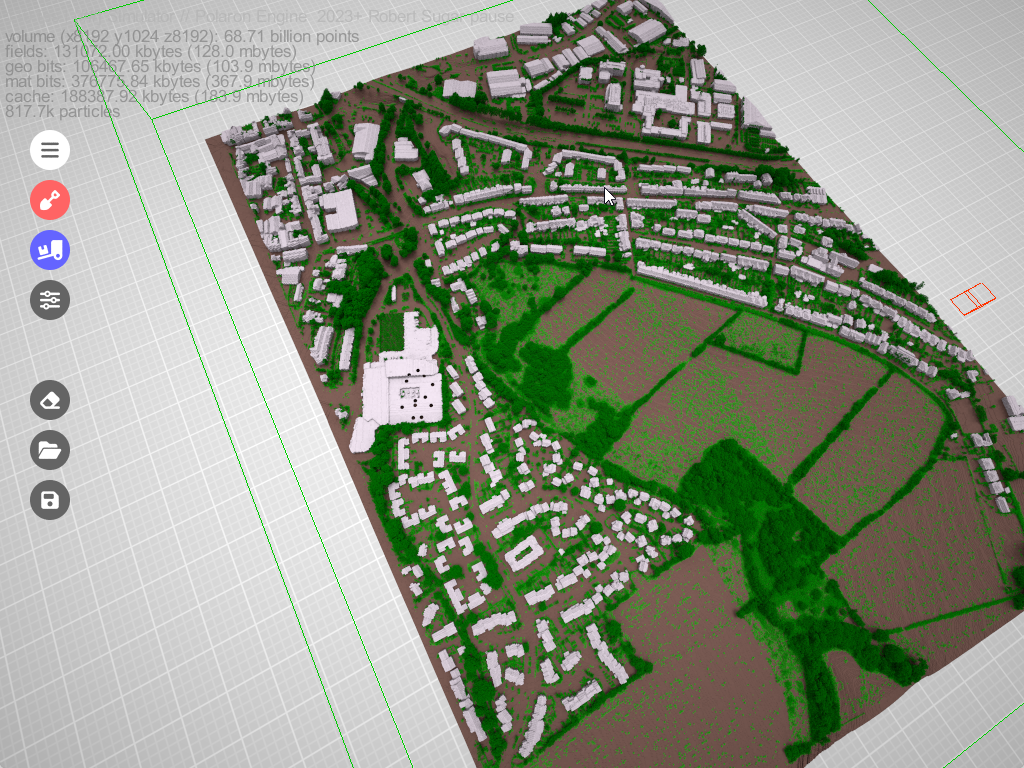

The above shot is a WIP model of Newport on the Isle of Wight, derived from Defra Lidar data. From it we can generate semantic profiles for each building (using OSINT and other data) to create a synthetic representation of that space, its occupancy, its usage and the systems that keep it running (both people and infrastructure). Indeed, we have some thoughts about using these base semantic models to power other polygon-based tools and games, allowing whole areas to be synthetically generated and, perhaps more interestingly, analysed for change.

Stripped of the cosmetic and height data, the model can still be edited as if it were a 2D map, and you can see the consequences of those actions or changes in 3D, whether that’s introducing a power cut or sending in a giant mechanical robot.

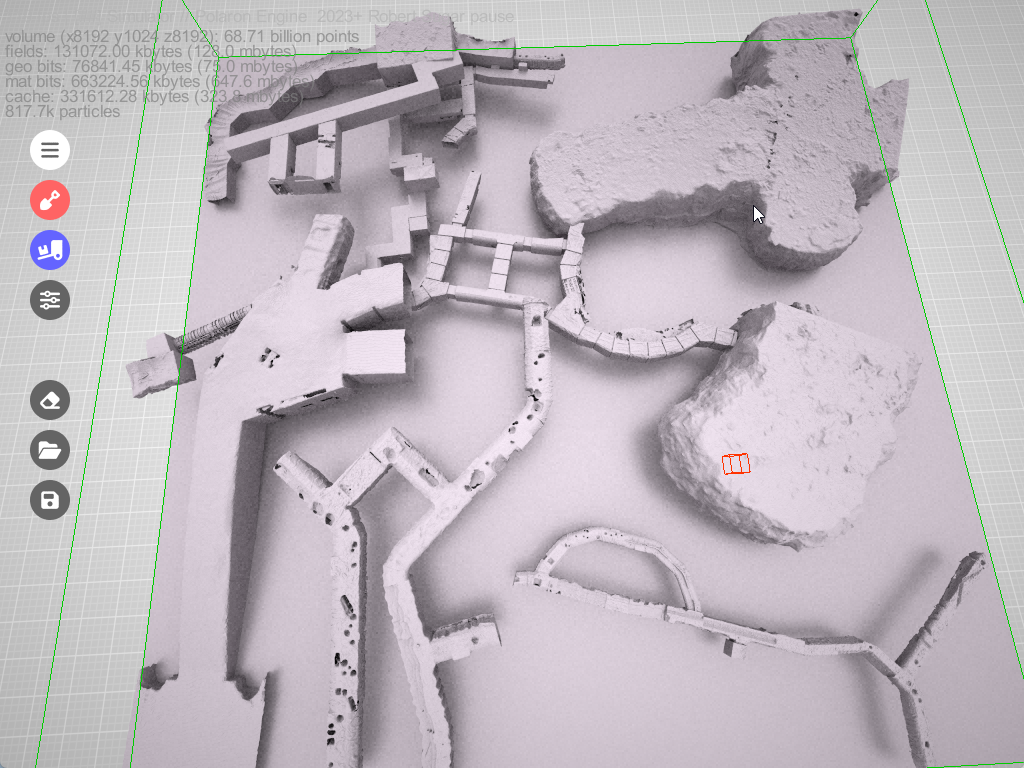

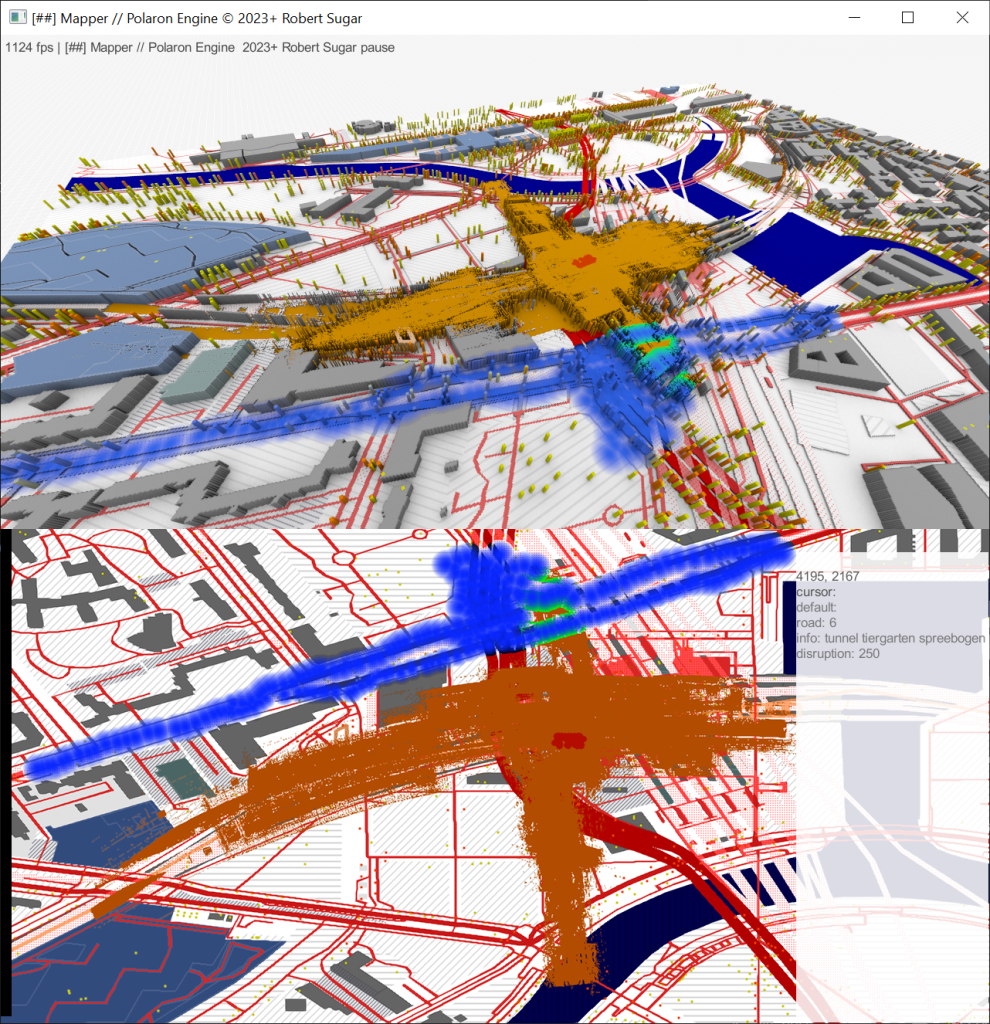

Or modelling pathfinding on real infrastructure and locations (Berlin central station)

Or zooming out to model how a protest might affect transport at Berlin central station
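Pathfinding of this kind can be sketched with A* search over a walkable layer extracted from the voxels, where an event such as a protest simply marks cells as blocked and agents re-route. The 2D grid, unit move costs, 4-connectivity and the `blocked` parameter are assumptions for illustration, not Polaron’s navigation system.

```python
import heapq
from typing import AbstractSet, Dict, List, Optional, Tuple

Cell = Tuple[int, int]

def astar(walkable: List[List[bool]], start: Cell, goal: Cell,
          blocked: AbstractSet[Cell] = frozenset()) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a walkable grid, or None if unreachable."""
    h, w = len(walkable), len(walkable[0])

    def passable(cell: Cell) -> bool:
        r, c = cell
        return 0 <= r < h and 0 <= c < w and walkable[r][c] and cell not in blocked

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    if not passable(start) or not passable(goal):
        return None
    open_heap = [(heuristic(start), 0, start)]
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if passable(nxt) and g + 1 < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = g + 1
                came_from[nxt] = cell
                heapq.heappush(open_heap, (g + 1 + heuristic(nxt), g + 1, nxt))
    return None

concourse = [[True] * 3 for _ in range(3)]
print(astar(concourse, (0, 0), (0, 2)))                     # [(0, 0), (0, 1), (0, 2)]
print(astar(concourse, (0, 0), (0, 2), blocked={(0, 1)}))  # detour through row 1
```

Keeping closures in a separate `blocked` set leaves the underlying terrain untouched, so the same station model can be re-queried as an event spreads or clears.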

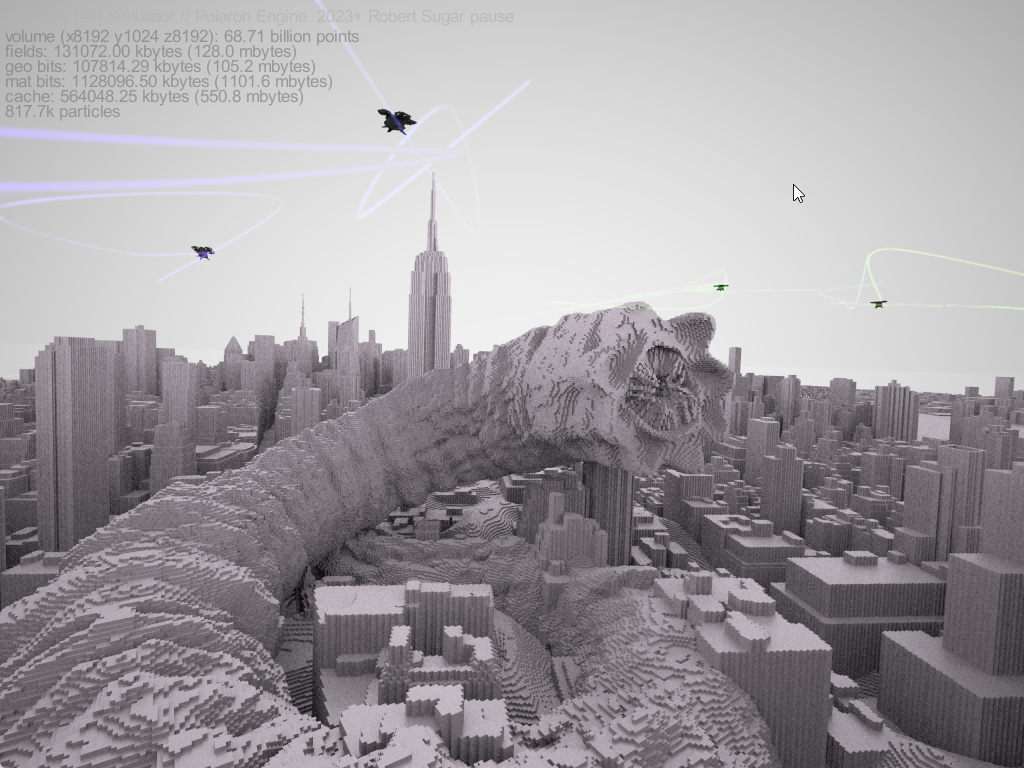

Or gaming out the effect of Shai-Hulud emerging in the middle of Downtown Manhattan NYC

Packing data into voxels at this scale allows for incredibly complex simulations and physics, and is more closely rooted in the real world than the artifice of even the most aesthetic engine.

It is possible to profile environments and assess them for access, landing points and a whole host of other criteria. Since areas can be “chunked”, this can be run at high resolution and written back to a larger terrain model, similar to how cloud-native point cloud formats subdivide clouds, or how SLAM systems composite scans and sequences of frames, combining sub-maps into a global map.
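Chunking rests on a simple mapping between global voxel coordinates and (chunk, local) coordinates. The sketch below assumes cubic chunks of 32 voxels per side (a hypothetical size) and shows a region being copied out, processed at full resolution and written back into a larger sparse model; `ChunkedWorld` and its methods are illustrative names, not Polaron’s API.

```python
from typing import Dict, Tuple

CHUNK = 32  # hypothetical chunk edge length in voxels
Coord = Tuple[int, int, int]

def to_chunk(g: Coord) -> Tuple[Coord, Coord]:
    """Split a global voxel coordinate into (chunk index, local offset)."""
    # divmod floors, so negative coordinates land in the correct chunk
    parts = [divmod(c, CHUNK) for c in g]
    return (parts[0][0], parts[1][0], parts[2][0]), (parts[0][1], parts[1][1], parts[2][1])

def to_global(chunk: Coord, local: Coord) -> Coord:
    """Inverse of to_chunk."""
    return (chunk[0] * CHUNK + local[0], chunk[1] * CHUNK + local[1], chunk[2] * CHUNK + local[2])

class ChunkedWorld:
    """Sparse world: only chunks that contain data are stored."""

    def __init__(self) -> None:
        self.chunks: Dict[Coord, Dict[Coord, int]] = {}

    def set(self, g: Coord, value: int) -> None:
        chunk, loc = to_chunk(g)
        self.chunks.setdefault(chunk, {})[loc] = value

    def get(self, g: Coord, default: int = 0) -> int:
        chunk, loc = to_chunk(g)
        return self.chunks.get(chunk, {}).get(loc, default)

    def extract(self, chunk: Coord) -> Dict[Coord, int]:
        """Copy one chunk out for high-resolution processing."""
        return dict(self.chunks.get(chunk, {}))

    def write_back(self, chunk: Coord, data: Dict[Coord, int]) -> None:
        """Replace a chunk with processed results (dropping it if now empty)."""
        if data:
            self.chunks[chunk] = dict(data)
        else:
            self.chunks.pop(chunk, None)

world = ChunkedWorld()
world.set((-1, 0, 33), 7)
chunk, _ = to_chunk((-1, 0, 33))
local = world.extract(chunk)
local = {k: v + 1 for k, v in local.items()}  # stand-in for a high-resolution analysis pass
world.write_back(chunk, local)
print(world.get((-1, 0, 33)))  # 8
```

Because each chunk is independent, many chunks can be processed in parallel and merged back, much like sub-maps being combined into a global map.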

We’ve done a lot of work on different datasets, including bathymetric data, a variety of point cloud formats and even wall-penetrating radar.

With each application we’ve refined the engine to bring our users the best possible experience and the highest-performance analysis tools: turning scans into simulations and terrain models, and providing the basis for amazing experiences.

Well done for wading through that all!

We hope you find it interesting and would love to hear your thoughts.