Urban Hawk was asked to provide a computer vision capability that applies object detection and proximity relationships on public transport, using 5G to pass the resulting data back to an edge-based system. The system establishes whether baggage has been left behind innocently, matches school bus pick-up and drop-off locations to waiting parents, and detects incidents involving passengers travelling on public transport.

#1: lost object detection

We track passengers on board public transport buses (extendable to other modes of transport, such as trains and trams) via the CCTV video feed.

We link the objects that come on board with these passengers, and trigger an alert when a passenger gets off but their object stays on board.
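The linking and alert logic described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Urban Hawk implementation: it assumes every tracked passenger and object already has a stable ID and a 2D floor position from the detector, and the class name `OnboardState`, the nearest-passenger linking rule and the `max_link_distance` threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from math import dist

# Sketch only: class name, linking rule and threshold are hypothetical.
Point = tuple[float, float]

@dataclass
class OnboardState:
    """Per-vehicle state: tracked passengers, tracked objects and their links."""
    passengers: dict[int, Point] = field(default_factory=dict)  # passenger id -> position
    objects: dict[int, Point] = field(default_factory=dict)     # object id -> position
    owner: dict[int, int] = field(default_factory=dict)         # object id -> passenger id

    def board_object(self, object_id: int, position: Point, max_link_distance: float = 1.0):
        """Record a new object and link it to the nearest passenger within range."""
        self.objects[object_id] = position
        if not self.passengers:
            return None
        nearest = min(self.passengers, key=lambda pid: dist(self.passengers[pid], position))
        if dist(self.passengers[nearest], position) <= max_link_distance:
            self.owner[object_id] = nearest
            return nearest
        return None  # no passenger close enough: the object stays unassigned

    def object_removed(self, object_id: int) -> None:
        """The object left the vehicle (e.g. carried off), so it cannot be left behind."""
        self.objects.pop(object_id, None)
        self.owner.pop(object_id, None)

    def passenger_exits(self, passenger_id: int) -> list[int]:
        """Remove the passenger and return IDs of their objects still on board (alerts)."""
        self.passengers.pop(passenger_id, None)
        return sorted(oid for oid, pid in self.owner.items()
                      if pid == passenger_id and oid in self.objects)

state = OnboardState(passengers={1: (0.0, 0.0), 2: (5.0, 5.0)})
state.board_object(10, (0.5, 0.2))   # linked to passenger 1
print(state.passenger_exits(1))      # [10] -> left-behind alert
```

Linking an object to whichever passenger is nearest when it first appears is the simplest possible rule; shared or handed-over baggage would need a richer association model.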

#2: on board sickness, violence, and accident detection

Through the same CCTV feed, we track the body pose of each passenger on board and detect unusual scenarios, such as sudden movements, falls and impacts (caused by sudden emergency braking, for example).
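A simple heuristic for one of these scenarios, a fall, is sketched below. It assumes the pose estimator provides neck and hip keypoints for each frame in a coordinate frame where y increases downwards; the function names and both thresholds (`drop_speed`, `lying_angle`) are hypothetical and would need tuning against real footage.

```python
import math

# Sketch only: keypoint layout, thresholds and function names are hypothetical.
Keypoint = tuple[float, float]  # (x, y) with y increasing downwards

def torso_angle_deg(neck: Keypoint, hip: Keypoint) -> float:
    """Angle of the neck-hip segment from vertical: 0 = upright, 90 = horizontal."""
    dx = hip[0] - neck[0]
    dy = hip[1] - neck[1]
    return math.degrees(math.atan2(abs(dx), abs(dy)))

def detect_fall(frames: list[tuple[Keypoint, Keypoint]], fps: float,
                drop_speed: float = 1.5, lying_angle: float = 60.0) -> bool:
    """Flag a fall: the hip drops faster than `drop_speed` (units/s) between two
    consecutive frames, and the final posture is close to horizontal."""
    fast_drop = any((cur_hip[1] - prev_hip[1]) * fps > drop_speed
                    for (_, prev_hip), (_, cur_hip) in zip(frames, frames[1:]))
    neck, hip = frames[-1]
    return fast_drop and torso_angle_deg(neck, hip) >= lying_angle

upright = ((0.0, 0.0), (0.0, 0.5))
fall = [upright, ((0.0, 0.1), (0.0, 0.6)), ((0.6, 1.2), (0.0, 1.2))]
print(detect_fall(fall, fps=5))           # True
print(detect_fall([upright] * 3, fps=5))  # False
```

Requiring both a fast drop and a near-horizontal final posture keeps a quick crouch or sitting down from being reported as a fall.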

Lost Items & Baggage

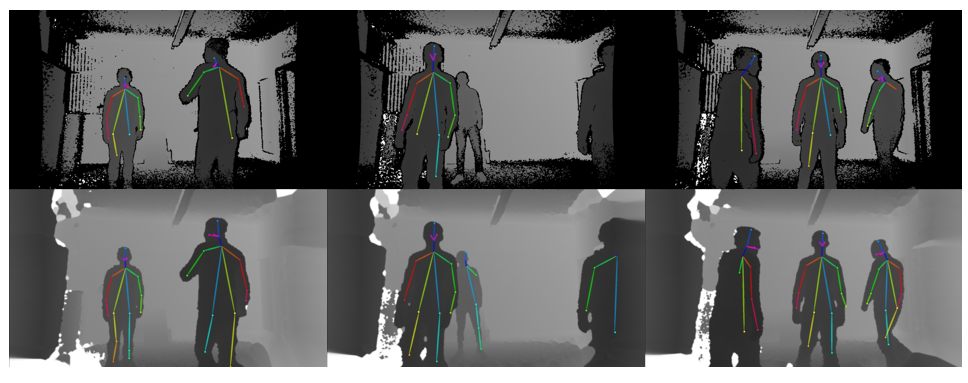

We have achieved temporal coherence in live video feeds, with stable pose estimation for multiple people, and have extended the detector to basic objects such as bags.

This means we can follow each pose, trigger an alert when a passenger enters or leaves the bus, and establish a correlation between that passenger and an item. The detector can then trigger alerts about objects linked to that pose (i.e. to that passenger).

This allows us to detect left-behind items and associate them with a given passenger.
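Temporal coherence means keeping the same identity for a person or bag from one frame to the next. One common way to achieve this, shown here as a sketch rather than the detector actually used, is greedy matching of bounding boxes by intersection-over-union (IoU); the box format, the `min_iou` value and the function names are assumptions.

```python
# Sketch only: box format, threshold and function names are hypothetical.
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def update_tracks(tracks: dict[int, Box], detections: list[Box], next_id: int,
                  min_iou: float = 0.3) -> tuple[dict[int, Box], int]:
    """Greedily match this frame's detections to existing tracks by IoU.
    Matched detections keep their track ID; unmatched ones start new tracks."""
    candidates = sorted(
        ((iou(box, det), tid, di)
         for tid, box in tracks.items() for di, det in enumerate(detections)),
        reverse=True,
    )
    new_tracks: dict[int, Box] = {}
    used_tracks, used_dets = set(), set()
    for score, tid, di in candidates:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in used_tracks or di in used_dets:
            continue
        new_tracks[tid] = detections[di]
        used_tracks.add(tid)
        used_dets.add(di)
    for di, det in enumerate(detections):
        if di not in used_dets:
            new_tracks[next_id] = det
            next_id += 1
    return new_tracks, next_id

tracks, next_id = update_tracks({}, [(0, 0, 10, 10), (20, 20, 30, 30)], next_id=0)
tracks, next_id = update_tracks(tracks, [(21, 21, 31, 31), (1, 1, 11, 11)], next_id)
print(sorted(tracks.items()))  # [(0, (1, 1, 11, 11)), (1, (21, 21, 31, 31))]
```

Unmatched tracks are simply dropped here; a production tracker would typically keep them alive for a few frames to ride out occlusions.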

Detectors passing location and live volumetric data into edge and 5G connected real-time simulations

With a stable detector in place, we identified the following functionality that can be delivered by leveraging high-bandwidth connectivity:

- Stable pose tracking from a single camera in varying light conditions. Study and model the limits of perception to provide a reliable and consistent year-round service.

- Identify the meaning of poses, e.g. sitting, standing or lying on the floor, and their context, such as recovery or the timing of pose changes.

- Track movement within the bus or carriage and any zonal signifiers, e.g. a passenger in a spot reserved for passengers with disabilities, or the presence of a mobility aid such as a wheelchair.

- Identify hazardous movements (falls, impacts, violent or sudden motion) and trigger an alert.

- Isolate people/human bodies from the imagery and compare the remaining image areas against pre-stored 'empty bus' captures.

- Detect new objects (baggage, boxes, clothing).

- Study whether objects can be classified to a certain extent and whether that is viable, or whether to simply track them as generic objects.

- Assign new objects to people (i.e. new passengers who potentially bring them on board when boarding).

- Track when a pre-assigned passenger leaves the area but their associated baggage is not taken.
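The 'empty bus' comparison in the list above can be illustrated with plain background differencing. This sketch assumes grayscale frames stored as equal-sized 2D lists, person regions given as bounding boxes, and a fixed `threshold`; all names are hypothetical, and given the varying light conditions noted above, a real system would likely need per-lighting reference captures or adaptive thresholds.

```python
# Sketch only: frame layout, threshold and function names are hypothetical.
Grid = list[list[int]]
RowColBox = tuple[int, int, int, int]  # (row1, col1, row2, col2), end-exclusive

def new_object_pixels(frame: Grid, empty_reference: Grid,
                      person_boxes: list[RowColBox], threshold: int = 30) -> set[tuple[int, int]]:
    """Pixels that differ from the 'empty bus' capture, excluding areas covered by people."""
    def inside_person(r: int, c: int) -> bool:
        return any(r1 <= r < r2 and c1 <= c < c2 for r1, c1, r2, c2 in person_boxes)

    changed = set()
    for r, (row, ref_row) in enumerate(zip(frame, empty_reference)):
        for c, (value, ref_value) in enumerate(zip(row, ref_row)):
            if abs(value - ref_value) > threshold and not inside_person(r, c):
                changed.add((r, c))
    return changed

def bounding_box(pixels):
    """Smallest end-exclusive box around the changed pixels, or None if nothing changed."""
    if not pixels:
        return None
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return (min(rows), min(cols), max(rows) + 1, max(cols) + 1)

empty = [[100] * 6 for _ in range(4)]
frame = [row[:] for row in empty]
for r in range(2):
    for c in range(2):
        frame[r][c] = 200          # a seated passenger
frame[3][4] = frame[3][5] = 20     # a dark bag on the floor
changed = new_object_pixels(frame, empty, person_boxes=[(0, 0, 2, 2)])
print(bounding_box(changed))       # (3, 4, 4, 6)
```

The remaining changed region is a candidate new object, which could then be assigned to a nearby passenger.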

This gives a high-confidence understanding of baggage, people and the associations between them, allowing left baggage to be dealt with quickly. This reduces security interventions and disruption while enhancing passenger satisfaction.

By pushing this data into Polaron, we can produce a real-time synthetic or directly observed digital twin of the space or area, then situate that twin inside a larger volume.

Adding in business logic & further expanding confidence

The real world is messy, and objects or baggage are sometimes passed between people, for example when someone handles a child's baggage or offers to carry another passenger's luggage.

- Detect handling of baggage by a passenger with no prior association to it, which may indicate theft or an incorrect bag pickup.

- Observe “reasonable” baggage exchange versus non-reasonable exchange, such as assault or theft, by combining different factors to create a high-confidence detection.

- Trigger alerts when passengers fall (e.g. during emergency braking) or to indicate a possible medical emergency, such as an unconscious passenger. This could also leverage additional sensors capable of detecting breathing rate and other vital signs.

- Develop a smartphone app interface that displays the alerts and also delivers infotainment or other information, potentially prompting intervention or action from the driver or other passengers.

- Profile passengers against ticketed numbers to detect students, minors and elderly passengers versus the expected typical full-fare payers.
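One way to combine several factors into a handover decision is a weighted score, sketched below. The factors mirror the examples in the text (a companion carrying a child's bag, an offer to carry luggage, theft, assault), but the field names, point weights and `alert_threshold` are hypothetical placeholders, not tuned values.

```python
from dataclasses import dataclass

# Sketch only: fields, weights and threshold are hypothetical.
@dataclass
class Handover:
    """A bag observed passing from its linked owner to another person."""
    receiver_linked_to_owner: bool  # e.g. boarded together, same travel group
    owner_stays_nearby: bool        # owner remains close after the transfer
    violent_motion: bool            # hazardous or sudden pose change during the transfer
    receiver_exits_soon: bool       # receiver leaves the vehicle shortly afterwards

# Illustrative weights in points; real values would have to be learned or tuned.
WEIGHTS = {
    "no_prior_link": 30,
    "owner_not_nearby": 20,
    "violent_motion": 50,
    "receiver_exits_soon": 10,
}

def suspicion_points(h: Handover) -> int:
    """Add up the weights of every suspicious factor present."""
    points = 0
    if not h.receiver_linked_to_owner:
        points += WEIGHTS["no_prior_link"]
    if not h.owner_stays_nearby:
        points += WEIGHTS["owner_not_nearby"]
    if h.violent_motion:
        points += WEIGHTS["violent_motion"]
    if h.receiver_exits_soon:
        points += WEIGHTS["receiver_exits_soon"]
    return points

def classify_handover(h: Handover, alert_threshold: int = 50) -> str:
    return "suspicious" if suspicion_points(h) >= alert_threshold else "reasonable"

helper = Handover(False, True, False, False)  # stranger offers to carry luggage
theft = Handover(False, False, False, True)
print(classify_handover(helper), classify_handover(theft))  # reasonable suspicious
```

No single factor other than violent motion is enough to raise an alert on its own, which is the point of combining them.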

Test case & Methodology

One or more CCTV cameras were installed in the school bus, transmitting images at 5 frames per second or higher to the 5G Edge node where the face recognition algorithm resides.

Children whose faces have already been scanned, analysed and tagged for identification occupy seats at random. The Edge algorithm identifies the children and sends the Back-end the list of boarded children. The Back-end holds the list of children who are expected to be on board, as well as the list of other pupils who attend the school.
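The identification step can be sketched as nearest-neighbour matching of face embeddings against the pre-scanned dataset. This assumes the pre-trained network outputs a fixed-length embedding per face and that cosine similarity is the comparison metric; `identify`, the gallery layout and `min_similarity` are hypothetical.

```python
import math

# Sketch only: embedding format, threshold and function names are hypothetical.
def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify(embedding, gallery, min_similarity=0.8):
    """Return the ID of the closest pre-scanned face, or None if nothing is close enough.
    gallery maps child ID -> reference embedding from the pre-scanned dataset."""
    best_id, best_sim = None, min_similarity
    for child_id, reference in gallery.items():
        sim = cosine_similarity(embedding, reference)
        if sim >= best_sim:
            best_id, best_sim = child_id, sim
    return best_id

gallery = {"child_a": [1.0, 0.0, 0.0], "child_b": [0.0, 1.0, 0.0]}
boarded = [identify(e, gallery) for e in ([0.9, 0.1, 0.0], [0.0, 0.0, 1.0])]
print(boarded)  # ['child_a', None]
```

Returning `None` below the threshold, rather than the closest match, is what lets the Edge report an unrecognised child instead of misidentifying them.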

Three more children board the bus. The first is expected to be on board; the second is not, but is a pupil at the same school; and the third is unknown (not a pupil at the school).

The Edge algorithm identifies the first two children and sends their data to the Back-end. It does not recognise the third child and therefore alerts the Back-end, which in turn alerts the bus driver, the school and the transport operator to a potentially misplaced child.
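The Back-end's classification of each boarding child in this scenario could look like the following sketch. The three statuses follow the test case; the function and recipient names are hypothetical, and only the unknown-child alert is modelled because it is the only boarding alert the scenario describes.

```python
from typing import Optional

# Sketch only: status labels, recipient names and function names are hypothetical.
# Only the unknown-child alert is described in the test case; other statuses raise none here.
ALERT_RECIPIENTS = {"unknown": ["bus_driver", "school", "transport_operator"]}

def classify_boarding(child_id: Optional[str], expected_on_bus: set, school_pupils: set) -> str:
    """child_id is None when the Edge could not recognise the face."""
    if child_id is not None and child_id in expected_on_bus:
        return "expected"
    if child_id is not None and child_id in school_pupils:
        return "unexpected_pupil"
    return "unknown"

def handle_boarding(child_id: Optional[str], expected_on_bus: set, school_pupils: set):
    status = classify_boarding(child_id, expected_on_bus, school_pupils)
    return status, list(ALERT_RECIPIENTS.get(status, []))

expected = {"child_a"}
pupils = {"child_a", "child_b"}
for cid in ("child_a", "child_b", None):
    print(handle_boarding(cid, expected, pupils))
# ('expected', [])
# ('unexpected_pupil', [])
# ('unknown', ['bus_driver', 'school', 'transport_operator'])
```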

Next, a random child (from the group the system successfully identified) gets off the bus but leaves their bag on board. The Edge algorithm identifies that the child has left through the door and marks the left-behind item. It then alerts the Back-end, which triggers all relevant communication: it compares the GPS coordinates with the child's expected drop-off location and sends alerts accordingly (to the bus driver if the drop-off location differs from the expected one, to the transport operator, and to the parent's Front-end).
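The drop-off check can be sketched with the haversine great-circle distance between the GPS fix and the expected drop-off point. The 150 m `tolerance_m` value, the example coordinates and the recipient names are assumptions; the recipient rule follows the description above, with the bus driver alerted only when the locations differ.

```python
import math

# Sketch only: tolerance, coordinates and recipient labels are hypothetical.
EARTH_RADIUS_M = 6_371_000.0

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def alighting_alerts(actual, expected, tolerance_m=150.0):
    """Recipients when a child gets off leaving an item; the driver only for an unexpected stop."""
    recipients = ["transport_operator", "parent_front_end"]
    if haversine_m(*actual, *expected) > tolerance_m:
        recipients.insert(0, "bus_driver")
    return recipients

expected_stop = (51.5007, -0.1246)  # hypothetical stop
print(alighting_alerts(expected_stop, expected_stop))
# ['transport_operator', 'parent_front_end']
print(alighting_alerts((51.5107, -0.1246), expected_stop))  # about 1.1 km away
# ['bus_driver', 'transport_operator', 'parent_front_end']
```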

The project required us to take a holistic view of systems and systems integration, measuring existing KPIs and metrics while also adding and extracting additional value. As a result, multiple systems were replaced and renewed to deliver the required efficiencies and benefits, allowing far greater interoperability and interaction between systems.

KPIs met:

- Face recognition: error rate lower than 10% (with refinement, this could easily be brought below 3-4%).

- Unwanted item detection (on board the bus): error rate lower than 20%.

Outcomes for child safety assessments

The use case exploits 5G technology so that a single sensor and processing hub can drive many different experiences and capabilities:

1. Face recognition of children: parents receive alerts confirming that their child has successfully boarded or left the bus.

2. Identification of suspected lost items on the bus: alerts are received by the CCC.

3. Identification of a sick person on the bus: alerts are received by the CCC and forwarded to the intervention team in the ambulance.

4. Identification of violent behaviour by a passenger: alerts are received by the CCC and forwarded to the intervention team.

5. Passenger profiling against ticketed numbers, to detect students, minors and elderly passengers versus the expected typical full-fare payers.
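Because one sensing pipeline feeds all of these outcomes, the routing of each alert type can be kept as data rather than code. The sketch below encodes outcomes 1 to 4 as stated above; the event-type keys and recipient labels are hypothetical, and profiling (outcome 5) is omitted because no alert recipient is specified for it.

```python
from dataclasses import dataclass

# Sketch only: event keys and recipient labels are hypothetical.
@dataclass(frozen=True)
class Route:
    primary: str                     # who receives the alert first
    forward_to: tuple[str, ...] = () # who the alert is then forwarded to

ROUTES = {
    "child_boarded": Route("parents"),
    "child_alighted": Route("parents"),
    "lost_item": Route("CCC"),
    "sick_passenger": Route("CCC", ("ambulance_intervention_team",)),
    "violent_passenger": Route("CCC", ("intervention_team",)),
}

def route_alert(event_type: str) -> list[str]:
    """Ordered list of recipients for one detected event."""
    route = ROUTES.get(event_type)
    if route is None:
        raise ValueError(f"no route configured for {event_type!r}")
    return [route.primary, *route.forward_to]

print(route_alert("violent_passenger"))  # ['CCC', 'intervention_team']
```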

Technology & Connectivity

- CCTV camera on board the bus (any camera, preferably RGB) that permits rapid data transmission; otherwise, an Edge computing node is used.

- In this case, the Edge node is a modest PC with a CPU and GPU (NVIDIA GTX 1080 or newer; minimum 4 GB VRAM) and a minimum of 4 GB RAM.

- Runs on Linux or Windows.

- GPS transmitter on board the bus, plus a mini computer with 5G connectivity that forwards data, including camera footage and GPS position, from the bus to the Edge.

- The Back-end is a PC with a CPU and a minimum of 8 GB RAM. The Front-end is an Android smartphone and a password-protected web portal.

- The Edge ran on Windows for testing, with Linux for later commercial deployment.

- TensorFlow is used to run the pre-trained neural network responsible for face detection and identification.

- The Back-end holds the pre-scanned datasets (which are synchronised from it to the Edge nodes) and the list of communication actions, both stored in the same SQL database.

- The Front-end is built in Xamarin (for greater portability in later commercial phases), but is Android-only for the time being.

- Web portal in PHP or JavaScript.
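A minimal schema for keeping the pre-scanned datasets and the communication actions in one SQL database might look like this. It uses SQLite purely for illustration (the document does not name the database engine), and every table, column and function name is hypothetical.

```python
import sqlite3

# Sketch only: SQLite stands in for the unnamed SQL database; all names are hypothetical.
SCHEMA = """
CREATE TABLE child (
    child_id   TEXT PRIMARY KEY,
    school_id  TEXT NOT NULL,
    embedding  BLOB NOT NULL,      -- pre-scanned face data synchronised to Edge nodes
    updated_at INTEGER NOT NULL    -- Unix time, used for incremental sync
);
CREATE TABLE communication_action (
    event_type TEXT NOT NULL,      -- e.g. 'unknown_child'
    recipient  TEXT NOT NULL,      -- e.g. 'bus_driver', 'school'
    PRIMARY KEY (event_type, recipient)
);
"""

def open_backend(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def rows_to_sync(conn, since):
    """Children whose pre-scanned data changed after `since`, to push to Edge nodes."""
    return conn.execute(
        "SELECT child_id, embedding FROM child WHERE updated_at > ? ORDER BY child_id",
        (since,),
    ).fetchall()

def recipients_for(conn, event_type):
    """Who should be contacted for a given event type."""
    return [recipient for (recipient,) in conn.execute(
        "SELECT recipient FROM communication_action WHERE event_type = ? ORDER BY recipient",
        (event_type,))]

conn = open_backend()
conn.executemany("INSERT INTO child VALUES (?, ?, ?, ?)",
                 [("child_a", "school_1", b"\x01", 100), ("child_b", "school_1", b"\x02", 200)])
conn.executemany("INSERT INTO communication_action VALUES (?, ?)",
                 [("unknown_child", "school"), ("unknown_child", "bus_driver"),
                  ("unknown_child", "transport_operator")])
print(rows_to_sync(conn, since=150))          # [('child_b', b'\x02')]
print(recipients_for(conn, "unknown_child"))  # ['bus_driver', 'school', 'transport_operator']
```

Keeping both tables in one database lets the Back-end change who is notified without redeploying the Edge nodes, which only receive the face data.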

Find out more about Orange France & Bulgaria's work on Safety and Security here: