Polaron is the flagship product of Urban Hawk Ltd. It is a combination of technologies that allows for the ingestion of point cloud data, EOS (satellite) data and semantic data (e.g. roads, building profiles, etc.). This produces a very large-scale model to traverse and interact with, whether for gaming, serious simulation or analysis. We’ve compiled some FAQs in response to frequent requests from Urban Hawk clients. If you have more questions or suggestions, please get in touch!

FAQ

Do you provide product support?

We’re working towards providing a stable and robust product. We’ve spent a lot of time developing efficient memory and resource management tools as well as a range of handlers and error checking methods.

This provides a reliable and robust software service that can be hosted or run locally (depending on the use and licence).

For commercial applications we’re happy to offer an SLA (at cost) which includes up-time and support.

If you’re an early adopter or client – we will endeavour to offer support via a typical triage and ticketing system – as well as providing a tool for real-time comms (Google Chat / Discord) depending on your level of support.

We will also endeavour to push regular updates and bug fixes (both small incremental fixes and larger updates). We are working towards an automatic test wrapper that will allow us to perform daily / nightly builds and ensure the quality assurance expected of a software product. This will likely come in 2024 and is again quite dependent on the use case.

Finally we are looking at hosted / managed solutions for some applications which will have the first updates and priority attention.

What currencies do you accept for payments?

Currently we accept GBP & Euros and are likely to adopt a 3rd party system for payments to enable the Freemium model.

All prices are stated in £ unless otherwise indicated. We are also happy to consider arrangements with larger volume users that are more economical owing to the economies of scale and cost to serve. Please contact us for this.

For Games we’re looking at the usual distribution channels of Steam, Epic Store, etc – as well as considering an Early Access or Crowd supported model.

How much does it cost?

The game product will likely retail at around the typical Steam price for a high-end PC game.

Watch this space for more details.

For commercial applications it’s a case of the software licence, our time in development (if custom requirements are needed) and any hardware or operating costs for running the service and providing support.

Our costs are very economical compared with other GIS tools and services. Contact us for a specific quote.

What is Polaron?

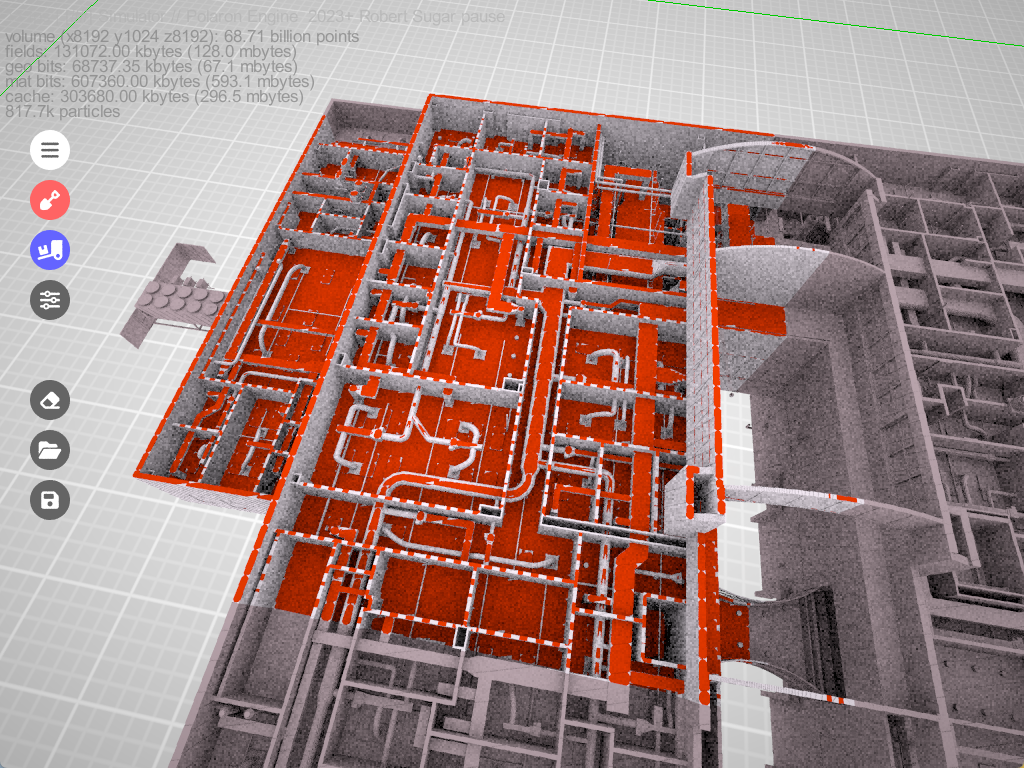

Polaron is a volumetric simulation engine. The easiest comparison is Minecraft – but at a far higher level of detail – where instead of stone and rock encoding and ray casting – we can encode the physical, RF and semantic values of each “block”, which then allows us to propagate through that environment. To continue the Minecraft analogy – imagine releasing several thousand autonomous characters that have rules as to how to alter, update, build and traverse that environment. This gives the capability to quickly update data and perform complex queries and simulations – from RF to pathfinding – set against an exponentially growing number of rules and layers of data.
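As a rough illustration of what encoding physical, RF and semantic values per block could look like, the sketch below stores a semantic label and an RF loss value in each voxel and sums the loss along a straight path. The `Voxel` fields, the dB figures and the `path_loss_db` helper are hypothetical and are not Polaron’s actual data model or propagation method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Voxel:
    """One hypothetical block: a semantic label plus an RF loss value."""
    label: str
    rf_loss_db: float  # assumed attenuation for crossing this voxel


AIR = Voxel("air", 0.0)


def path_loss_db(grid, start, end):
    """Sum the RF loss of every voxel on a straight path from start to end.

    `grid` maps (x, y, z) to Voxel; missing cells are treated as air.
    The path is sampled at unit steps, which is a simplification.
    """
    steps = max(abs(e - s) for s, e in zip(start, end))
    if steps == 0:
        return grid.get(start, AIR).rf_loss_db
    total = 0.0
    visited = set()
    for i in range(steps + 1):
        t = i / steps
        cell = tuple(round(s + (e - s) * t) for s, e in zip(start, end))
        if cell not in visited:          # count each voxel only once
            visited.add(cell)
            total += grid.get(cell, AIR).rf_loss_db
    return total


# Example values are made up for illustration only.
grid = {(2, 0, 0): Voxel("brick_wall", 10.0), (3, 0, 0): Voxel("glass", 2.0)}
loss = path_loss_db(grid, (0, 0, 0), (5, 0, 0))
```

Keeping the label and the physical value in the same cell means one lookup answers both “what is here?” and “how does a signal behave here?”.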

We can’t do everything all at once – but we can sub-divide tasks and optimise recurring simulation layers or integrate into other systems and tools, cache and adaptively scale the detail or packing of data into the simulation.

Is it a 3D / Game engine?

Great question – and it could be – there are plenty of Voxel based games out there – and voxels and 3D pixels are a component of rendering tools, NeRFies and many more.

When an engine is made – you have to choose where to focus and optimise – and Polaron is optimised for large scale simulation in 2D and 3D. You can see more about the pros and cons of voxels in general terms elsewhere on the site.

One of our core benefits is to bridge the gap between 3D simulation and 2D simulation – for instance allowing you to see the patterns of life and changes in a building – in context of the wider city, area and transport links that it sits in. This adaptive layer – allows us to quickly fetch and assemble varying levels of detail (LODs) and different layers.

This enables users to examine how changes in one domain are impacted by and can effect changes in another – and manage externalities such as logistics, delays and infrastructure dependencies and costs. So whether that’s feeding the fight, pathfinding or adding in the effects of damage from physics – we have the scale and complexity to do a lot of this in real-time.

Games industry tools perform well in small scenarios (they were designed to do that). They are fast and provide fascinating visuals. But they break down at the scale we operate in, or when we flood them with real-time data.

Geospatial tools do cope with the scale but fall behind on features, speed, overall accessibility and look. Alternatively, they require very large cloud-based services to provide a means to simulate, which are often tailored for GIS / EOS (i.e. satellite data) mapping and don’t readily encode data into 3D.

Whilst it’s a bit “horses for courses” we believe that in the simulation space Polaron is unique in its capabilities and performance. Polaron builds in the middle, where the biggest capability gaps lie. In voxelized environments we can compress spatial data efficiently while keeping access and traversability robust.

Windows or Linux based?

We use Windows (11) as our main development platform – but can install this via a container to allow it to run on a variety of systems and platforms.

The core software is written in C++ – so is fairly transportable – however to avoid supporting Dev builds in both Windows and Linux – we’ve focussed our efforts on Windows for now. We will likely be porting to Linux next to allow better scaling.

We already employ WSL for Docker container use and perform a range of other tasks via Linux based devices (e.g. computer vision), therefore it’s a case of when, not if.

We are likely to run on Linux containers in a hosted or cloud instance – it’s really a case of justifying the investment in time versus other demands.

Is your product compatible with Linux servers?

As we’ve said above we think this is coming in 2024!

We already use some Linux containers for some supporting services. So it’s a question of time.

We have already sent data over Linux containers using Port based APIs and WebRTC – so there’s no barrier to interoperability.

Can I use Polaron to Simulate Sensor data?

Yes! The speed of the DB is now limited by CPU throughput (the conductor of the orchestra) and much less by GPU parallelisation (breaking down a problem into smaller chunks). What that means is we have enough speed and performance to allow us to pull in and instantiate real-time sensor data – whether that’s RF, LIDAR or depth camera data. This allows us to quickly ingest and present a real 3D model of a space – and still perform simulation on that space.

You can see an example here – where we use wall penetrating radar data to present a visualisation of the data (not the object) through a wall. It’s worth checking out Wavesens, who are one of our RF and scanning partners.

Backend integration & running headless

We designed Polaron to run as a backend service – to process data – then surface and produce a tangible GUI to provide a “street view of data”. The heavy work is therefore done mainly at the back-end.

It is computationally heavy, and scales well onto networked CPU / GPU instances (and, we think, onto clusters).

Not all use cases need the GUI though – for instance we’ve worked with Fraunhofer to issue data over 5G to offer Unity based Edge Simulation, whereby Polaron provides the simulation but can also render and stream a mesh or data into Unity.

On low end devices (such as smartphones) we can use WebRTC and similar methods allowing the end user to just pull data from the back-end and display this through conventional platforms or any web browser (similar to game streaming services).

Our focus is to keep building Polaron’s rendering pipeline further, and we are also considering a lightweight front-end for remote access.

We’re currently working on an API gateway / platform that makes pulling and pushing data to and from your simulation easy, allowing RESTful API integrations and large-scale data transfers. This becomes very case specific, but in essence if you can stream it as live data, JSON, XML, etc. we can ingest it and render it as fast as it can be read.

For big data work we maintain an “unpacked” OSM DB that is refreshed roughly weekly or daily, depending on the scale and frequency of update.

But you can integrate other layers of map data – both open source and private. We’re hoping to come to terms with some additional providers soon to add to the data available to players and end users – or help them to use their own.
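To make the “if you can stream it as JSON we can ingest it” idea concrete, here is a minimal sketch that parses one JSON message and writes its points into a voxel dictionary. The message layout, the `ingest_message` function and the 1 m voxel size are assumptions for illustration, not a documented Polaron schema or API.

```python
import json


def ingest_message(scene, raw, voxel_size_m=1.0):
    """Parse one JSON message and write its points into `scene`.

    `scene` is a dict mapping integer (x, y, z) voxel indices to a value.
    The layout {"points": [{"x": .., "y": .., "z": .., "value": ..}]}
    is hypothetical.
    """
    message = json.loads(raw)
    written = 0
    for p in message.get("points", []):
        # Floor division keeps negative coordinates in the correct cell.
        key = tuple(int(p[axis] // voxel_size_m) for axis in ("x", "y", "z"))
        scene[key] = p.get("value")
        written += 1
    return written


scene = {}
raw = json.dumps({"points": [
    {"x": 1.2, "y": 0.4, "z": 3.9, "value": 7},
    {"x": -0.5, "y": 2.0, "z": 0.0, "value": 9},
]})
count = ingest_message(scene, raw)
```

A real feed would arrive over a socket or HTTP endpoint rather than a string, but the per-message work is the same: decode, quantise to a cell, write.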

Can I customise Agents and Simulations?

Yes!

We already have a scripting language that allows you to structure your data and the interfaces of the Cellular Automata or Agents and Queries into the terrain and semantic data.

We are working on a marketplace for B2C and B2B to allow this to be done via a web interface – as well as a native C interface allowing you to interact with and tune your simulations.

We’re looking at integrations into other data providers to allow 3D models to be easily ingested from other companies’ services – as well as making it easy to overlay large 2D and GIS scale data sets then display that back in 3D. E.g. height maps / DTM / demographic data etc.

Again this can be tuned and optimised for your needs. Our view is that we want to make the tools that make it easy to produce the simulations, games and queries you want. Aligning this to other tools, services and systems makes this more effective.

How does it work? What’s the underlying technology?

We store and process terabytes of spatial data and organise the scanned RGB + depth info (which we get from sensors) in a voxel-like spatial database managed via grids. This is lossless but very efficient. When combined with efficient data packing, and accelerated with distance fields at the higher levels in the hierarchy, it allows for incredibly fast traversal of large scenes and for simulation to be performed over very wide areas.
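One common way to manage a voxel database “via grids” is a two-level sparse structure, where only occupied bricks are stored and an empty brick is skipped with a single lookup. The sketch below shows that general idea only; the brick size, class name and dictionary-based storage are assumptions, and it does not include the distance fields or data packing mentioned above.

```python
BRICK = 8  # assumed brick edge length in voxels


class SparseVoxelGrid:
    """Two-level grid: a dict of bricks, each brick a dict of voxels."""

    def __init__(self):
        self.bricks = {}

    @staticmethod
    def _split(x, y, z):
        # divmod floors, so negative coordinates map to consistent bricks.
        bx, lx = divmod(x, BRICK)
        by, ly = divmod(y, BRICK)
        bz, lz = divmod(z, BRICK)
        return (bx, by, bz), (lx, ly, lz)

    def set(self, x, y, z, value):
        brick_key, local = self._split(x, y, z)
        self.bricks.setdefault(brick_key, {})[local] = value

    def get(self, x, y, z, default=None):
        brick_key, local = self._split(x, y, z)
        brick = self.bricks.get(brick_key)
        if brick is None:        # whole brick is empty: answer in one lookup
            return default
        return brick.get(local, default)


grid = SparseVoxelGrid()
grid.set(3, 4, 5, (255, 0, 0))
grid.set(-1, 0, 100, (0, 255, 0))
```

Empty space costs nothing here, which is the property that makes very large but mostly empty scenes tractable.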

Like any software – there are limitations of the resources it can consume – therefore a lot of development time and iterative refinement has been undertaken to provide an optimal balance between compression ratios, memory access/manipulation, and traversal.

What this provides is a volumetric database that offers the basis for physics and volumetric AI to traverse at very high speed (real-time in most cases, i.e. measured in ms), allowing for instant feedback from proposed changes and events in the scene – driven by user interactions and real-time sensor data.

How large are the point clouds you can support?

The current pipeline injects up to 20 million points per second from depth sensors.

This is however increasing all the time – primarily up to hardware-defined limits.

We can render 60 billion+ voxels at interactive rates on COTS hardware (a single RTX 3090 GPU).

We are not aware of any other technology being capable of the same. This continues to increase – as faster GPUs become available, RAM sizes increase and CPU performance improves – as well as hundreds of smaller software refinements that incrementally improve the overall performance.

From 2021-22 we improved net performance by over 50% with an expected incremental improvement of that magnitude in 2023-24.

We can support up to 68.8 billion points. How that’s used determines the space and size of a simulation: essentially an 8K resolution at 8 km x 8 km x 2 km at 1 m² resolution, though this can be much larger depending on the use case, density, etc.

Speed is a feature.

Real-time means real-time – which means running at anywhere from 30 fps to 140 fps.

How big a scene can I view and how much can I display?

Scene size: max 16k^3 voxel grid on single GPU; Optimised max 256k^3 on a GPU cluster.

(Theoretically this can be much, much larger, but we have not yet tested higher – we will do in time – likely on our managed hosting / cloud service.)

This depends on the data density and scale and how much of a given scene / area needs to be simulated in one scene. For instance London is 1,569 km² so could be fitted in at a smaller resolution – else use varying LOD and network simulations to present the entirety of the city.

Additionally some areas don’t require as much data – e.g. London has a lot of verticality and subterranean features, rivers and a fair amount of height and 3D data – which other areas don’t.
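The scene-size figures can be turned into a quick back-of-the-envelope calculation. The sketch below assumes “16k” means 16 x 1024 voxels per side and treats London as a square of equal area, both simplifications made here for illustration.

```python
import math


def voxel_count(edge):
    """Number of cells in a cubic grid with `edge` voxels per side."""
    return edge ** 3


def metres_per_voxel(area_km2, edge):
    """Horizontal cell size if a square of `area_km2` spans `edge` voxels."""
    side_m = math.sqrt(area_km2) * 1000.0
    return side_m / edge


single_gpu = voxel_count(16 * 1024)              # 16k^3 grid, assumed 16384 per side
london_res = metres_per_voxel(1569, 16 * 1024)   # London at 1,569 km²

print(f"{single_gpu:,} addressable voxels")
print(f"about {london_res:.1f} m per voxel horizontally")
```

Under those assumptions a 16k grid addresses 2^42 cells, and the whole of London fits at roughly 2.4 m per voxel horizontally, which is why finer detail needs LODs or several networked scenes.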

It is also possible to adopt an instantiation approach – where we don’t load all the data into the sim – until needed – allowing for efficient systems of simulation supported by intelligent caching mechanisms.
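A minimal sketch of that instantiation approach is a chunk cache that only loads data on first access and evicts the least recently used chunk when full. The `ChunkCache` class, the `fake_loader` stand-in and the capacity are hypothetical; Polaron’s actual caching mechanisms are not described in detail here.

```python
from collections import OrderedDict


class ChunkCache:
    """Load chunks on first access and evict the least recently used."""

    def __init__(self, loader, capacity):
        self.loader = loader          # callable: chunk_key -> chunk data
        self.capacity = capacity
        self.chunks = OrderedDict()
        self.loads = 0

    def get(self, key):
        if key in self.chunks:
            self.chunks.move_to_end(key)      # mark as most recently used
            return self.chunks[key]
        chunk = self.loader(key)              # only now touch storage
        self.loads += 1
        self.chunks[key] = chunk
        if len(self.chunks) > self.capacity:
            self.chunks.popitem(last=False)   # drop least recently used
        return chunk


def fake_loader(key):
    """Stand-in for reading a chunk from disk or a remote store."""
    return {"key": key, "voxels": []}


cache = ChunkCache(fake_loader, capacity=2)
cache.get((0, 0))
cache.get((0, 1))
cache.get((0, 0))   # cache hit, no load
cache.get((1, 1))   # cache is full, so (0, 1) is evicted
```

The simulation only ever pays for the areas it actually visits, and recently used areas stay resident.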

Finally we’re working on adaptive scaling – which you can see here – that allows some areas to be adaptively packed and filled.

How is data stored?

Info stored with each voxel: any custom data alongside grey, RGB or RGBA colour channels. This is a highly efficient means of storing data. There are also ways to interface with external SQL or other databases to allow keyed data, and the variation or extension of a given value. We’ve yet to find a use case where 256 values per voxel would be required, since each encoding can also be a scaled value or reference other tables.
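To illustrate how custom data can sit next to colour channels, and how a single byte can act as a scaled value or a reference into another table, here is a small packing sketch. The 6-byte layout, the `MATERIALS` lookup table and the moisture field are invented for this example and do not describe Polaron’s real storage format.

```python
import struct

# Hypothetical layout: 4 colour bytes followed by 2 custom bytes.
VOXEL_FORMAT = "<4B2B"
MATERIALS = {0: "air", 1: "concrete", 2: "water"}   # example lookup table


def pack_voxel(rgba, material_id, moisture):
    """Pack colour, a table reference and a 0.0-1.0 value into 6 bytes."""
    scaled = round(max(0.0, min(1.0, moisture)) * 255)   # scale to one byte
    return struct.pack(VOXEL_FORMAT, *rgba, material_id, scaled)


def unpack_voxel(blob):
    """Reverse pack_voxel: resolve the table reference and rescale."""
    r, g, b, a, material_id, scaled = struct.unpack(VOXEL_FORMAT, blob)
    return (r, g, b, a), MATERIALS[material_id], scaled / 255


blob = pack_voxel((120, 120, 120, 255), 1, 0.5)
rgba, material, moisture = unpack_voxel(blob)
```

The material byte costs one byte per voxel but can point at an arbitrarily rich external record, which is the same trade-off as keying into an external SQL table.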

This flexibility allows us to simulate physics (including fluid dynamics to run flood simulations and other natural disaster scenarios) and easily ingest a huge variety of data types in different formats (and reliability) as well as permitting users to “paint” in their own data.
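A very simplified picture of a flood scenario on gridded data is a cellular automaton in which wet cells spread to neighbours whose terrain lies below the water level. This is a toy sketch under that assumption, not fluid dynamics and not Polaron’s solver; the function names and the height values are made up.

```python
def flood_step(heights, wet, water_level):
    """One automaton step: wet cells spread to 4-neighbours below the water level."""
    rows, cols = len(heights), len(heights[0])
    new_wet = set(wet)
    for (r, c) in wet:
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and heights[nr][nc] < water_level:
                new_wet.add((nr, nc))
    return new_wet


def flood(heights, source, water_level):
    """Repeat flood_step until the wet area stops growing."""
    wet = {source}
    while True:
        nxt = flood_step(heights, wet, water_level)
        if nxt == wet:
            return wet
        wet = nxt


# Column 2 acts as a wall (height 5) that holds back water at level 3.
heights = [
    [1, 1, 5, 1],
    [1, 2, 5, 1],
    [1, 1, 5, 1],
]
flooded = flood(heights, source=(0, 0), water_level=3)
```

Raising `water_level` above 5 would let the water cross the wall, which is the kind of “what if” change that can be fed back instantly.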

But does it look good?

Polaron supports the usual range of 3D functions – Physical materials: reflection, refraction and light scattering and we will be integrating NVIDIA DLSS soon to provide even more attractive visualisations. There is the potential too to tie some of these systems back into the Voxel data store – which is something high end rendering pipelines do – to sub-divide the world into manageable and cacheable chunks.

Polaron is primarily a simulation tool – but does have a “make it look good” button for presentations and to allow more attractive looking models to be captured. Based on customer feedback – accuracy of the simulation data trumps the aesthetic most of the time – whilst not forgoing an attractive and easy user experience.

What about Rendering?

Fully path traced (progressive spatio-temporal re-projection). When interacting live – you might see some fuzziness around the edges – which is the AI agents busily at work re-calculating the scene based on your viewing position (frustum). Given you can fly through objects and scenes we can provide different layers of caching and rendering depending on your specific needs.

What 3D formats do you support?

Polaron already supports importing from conventional formats including PCD, OBJ, elevation maps, shapefiles and SVG.

We can also import DTM maps and large geospatial datasets – and export to allow interoperability. E.g. Work in QGIS but simulate in Polaron and send the results back to QGIS with spatial co-ordinates.

Anything that has a spatial aspect to it can be ingested – and many of these can be exported. We have adopted WGS84 as our spatial projection standard for now – as well as OSM and Ordnance Survey triplets.
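As an example of how WGS84 coordinates can be placed into a local grid, the sketch below converts a latitude and longitude into east and north voxel indices relative to an origin. It uses a spherical, equirectangular approximation chosen here for brevity; the function name, origin and voxel size are assumptions, and a production pipeline would normally use a proper geodetic library.

```python
import math

EARTH_RADIUS_M = 6_371_000.0   # mean radius; a spherical simplification


def wgs84_to_voxel(lat, lon, origin_lat, origin_lon, voxel_size_m=1.0):
    """Map a WGS84 lat/lon to (east, north) voxel indices near an origin.

    Uses an equirectangular approximation, which is only reasonable over
    a few tens of kilometres; it is not a full geodetic projection.
    """
    east_m = (math.radians(lon - origin_lon) * EARTH_RADIUS_M
              * math.cos(math.radians(origin_lat)))
    north_m = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    return math.floor(east_m / voxel_size_m), math.floor(north_m / voxel_size_m)


origin = (51.5000, -0.1200)    # arbitrary example origin in central London
ix, iy = wgs84_to_voxel(51.5010, -0.1200, *origin)
```

One thousandth of a degree of latitude is about 111 m, so at 1 m voxels the point above sits 111 cells north of the origin.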

Do you support SLAM systems? What does that mean?

Simultaneous Localisation and Mapping (SLAM) is the process by which a robot or sensor can be moved about an environment, recognise the change in its location and generate a map of the area it has visited – fusing this to create a “global map” from various smaller sub-maps (think frames of a video being converted to a full motion video – but for mapping).
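The “fusing sub-maps into a global map” step can be sketched as follows: each local scan is rotated and translated by the sensor pose and then quantised into shared cells. This 2D version assumes the pose is already known, which is the hard part that a real SLAM system estimates; `fuse_submap` and the 0.5 m cell size are illustrative only.

```python
import math


def fuse_submap(global_map, points, pose, voxel_size_m=0.5):
    """Transform local 2D points by `pose` and add them to the global map.

    `pose` is (x, y, heading_radians) of the sensor in the global frame.
    `global_map` is a set of occupied (ix, iy) cells.
    """
    px, py, theta = pose
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    for lx, ly in points:
        gx = px + cos_t * lx - sin_t * ly      # rotate, then translate
        gy = py + sin_t * lx + cos_t * ly
        cell = (math.floor(gx / voxel_size_m), math.floor(gy / voxel_size_m))
        global_map.add(cell)
    return global_map


global_map = set()
# The same wall point seen from two different poses lands in the same cell.
fuse_submap(global_map, [(2.2, 0.2)], pose=(0.0, 0.0, 0.0))
fuse_submap(global_map, [(0.2, 0.2)], pose=(2.4, 0.0, math.pi / 2))
```

Because both observations resolve to one cell, repeated passes over an area reinforce the map rather than duplicating it.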

We have demonstrated this with complete SLAM systems (kindly supported via the Oxford Robotics Lab) from sensors such as Monocular cameras (using structure from motion) and Stereo cameras (both depth perception and self supervised learning – aka neural cameras), Lidar (direct from point cloud), Leica BLK2GO, Frontier / NavLive handheld scanners and more.

We can stream or inject this into the volumetric scene as it’s being recorded – and provide a real-time update. We’re also looking at other sensors appended to that location data – such as RF, Thermal and even Geiger Counters – to allow the mapping of other spectrums of RF Data to further augment the scenes.

20 million points per second – offers a high resolution for spatial data feeds, whether lidar, radar or other systems.

Semantic segmentation

We have developed a volumetric AI – trained on a variety of volumetric data and objects, that automates the feature detection of objects in a scene and then annotates the voxels – e.g. this is a bench, this is part of bench-1, bench-2, bench-3 bench-with-a-single-person-on-it, etc. This is something we had been developing.

However the giants have massively outperformed this with tools such as Segment Anything Model from Meta, SAMGEO and many others that can quickly segment areas, allowing for annotation and machine learning. It’s something we want to come back to (see our investment page) – but for now we believe we’d be better off using these tools and, where open source and copyright adherence allows, adopting them into our pipeline.

Interestingly we can use geo-semantic data (descriptive detail about a location, object or place) to generate procedural facsimiles where sufficient data exists (e.g. inside of buildings etc). Therefore we can still ingest the end product of these 3rd party and external tools to generate the “street view of data” and relationship between different objects, entities and changes.

Noise and error can easily be corrected manually with the help of the built-in editors – in effect as simple as painting in an object – then letting the AI know what is / isn’t a given object – as well as modifying density of clouds, tolerances, etc.

If this is of interest to you – please let us know as we can entertain a partnership to help augment your services.

Cloud based Point Cloud Processing

We’ve engaged with Vercator, a UCL Photonics spinout that specialises in semantic segmentation and automatic point cloud fusion and cleaning – allowing very large scans to be digested and pushed into our system with the annotated information already encoded. This is beneficial for us as it provides a baseline from which to then automate Polaron’s own AI / ML encodings – allowing future updates (e.g. one area that’s had a lot of change) to be automatically ingested and detected.

Ultimately cleaner data makes for cleaner models – and Vercator’s online tools make it really easy to quickly remove people, clutter, surfaces, etc – to get you to the type of data you want.

Since we are primarily focussed on using the end product of these steps its

3D Editors & Tools

We have a number of GUI editors and Command Line and Text based editors.

1) Voxel sculpting tool (add/delete via numerous brushes, change colour and custom properties including annotation) which can be used in a destructive or non-destructive manner (e.g. Remove something from the scene – without deleting the actual data)

2) Mesher

Import and manage data sets and align data, scenes.

3) Simulation / Game layer

This lets you interact and play with the data and agents, simulations, and terrain in real-time.

In addition to these we have a command line console and tool – and can expose the configuration and customisation files to allow text file editing. For instance with OSM you can choose how to layer data – what colours and even what logic or lookups to use.
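The difference between destructive and non-destructive editing mentioned for the sculpting tool can be sketched with a separate mask layer: hiding a voxel leaves the underlying data intact, while deleting removes it. The `EditableScene` class and its method names are hypothetical and are not the editor’s real interface.

```python
class EditableScene:
    """Voxel data plus a separate mask of cells hidden by edits."""

    def __init__(self, voxels):
        self.voxels = dict(voxels)   # underlying data, untouched by hide()
        self.hidden = set()

    def hide(self, cell):
        """Non-destructive: the data stays in place but is not shown."""
        self.hidden.add(cell)

    def restore(self, cell):
        """Undo a hide()."""
        self.hidden.discard(cell)

    def delete(self, cell):
        """Destructive: the data is removed for good."""
        self.voxels.pop(cell, None)
        self.hidden.discard(cell)

    def visible(self):
        """Cells that should be rendered or simulated."""
        return {c: v for c, v in self.voxels.items() if c not in self.hidden}


scene = EditableScene({(0, 0, 0): "bench", (1, 0, 0): "person"})
scene.hide((1, 0, 0))            # remove the person from view only
shown_after_hide = scene.visible()
scene.restore((1, 0, 0))         # the original scan is still there
scene.delete((0, 0, 0))          # the bench is permanently removed
```

Keeping the mask separate from the scan means an edit can always be reverted without re-importing the source data.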

Web based editors

We have a mobile GUI which allows a user to add, highlight and edit a file from their phone. This was tested with approx. 10 concurrent users on a single simulation, with different read/write rights and access to different layers of data.

This was developed as part of 5G-Victori, a Horizon 2020 programme.

API connections

We paused our API gateway development but got as far as exposing some of the APIs to allow integration. It increasingly looks like it makes sense to allow an accompanying marketplace that allows for data and file exchange, config and modding.

We want to support both serious developers and simple editing and importing of community or existing models, agents, AI and resources. This is in the works.

Allowing similar levels of customisation via a simple web GUI reduces the barrier to using our software and products. It also permits edge-based queries to be passed quickly, and complex queries to be generated from limited edge-based inputs.

We’ve begun some early work on graph database interfaces into this – especially with NLP and ChatGPT-type natural language queries that can then generate very complex queries.

Unity Integration

We are currently working with a highly respected technical developer to connect their multi-user AR / VR Unity experience to our simulation. We want to be able to not only build a Unity scene but also consider how we can update and refine it, to bring the best of a 3D gaming experience driven by real world simulation.

However before we worry about how we power another 3D engine – we want to ensure our own interfaces are up to scratch first.